One of the more surprising features in iOS 16 is the ability to cut out people from a picture (or a dog, a car, whatever’s in focus) and copy it into another app. You can send it in iMessage, paste it in a photo editing app, or use Universal Clipboard to paste it on a nearby iPad or Mac.

What’s it for? Well, it’s great for making stickers for WhatsApp and Snapchat, plus it’s a hell of a lot of fun. If you’re putting together a YouTube thumbnail or making memes, it can significantly cut down the time you spend tracing around edges by hand, but it’s by no means precise enough for professional work.

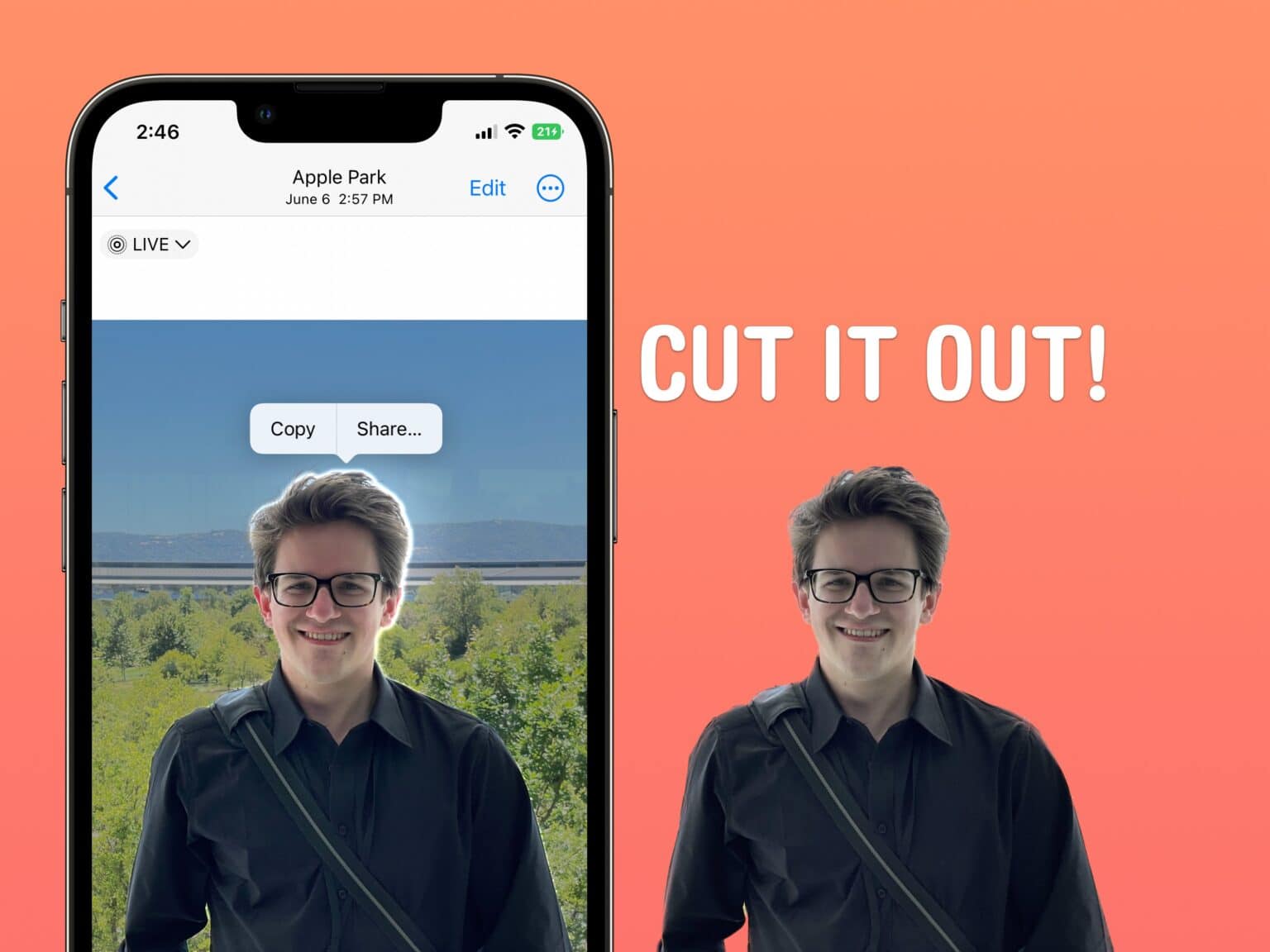

How to select, copy and share subjects from photos in iOS 16

Image: Apple

Speculation is that Apple first developed the subject-selection technology (technically part of what the company calls Visual Look Up, which arrived in iOS 15) for a different feature entirely.

People have noticed that when you position a picture just right on the new Lock Screen, the subject will slightly overlap the clock. This can’t rely on depth information captured with the photo, because lots of people have pictures taken on older iPhones, on digital cameras, or in apps that don’t capture depth. So Apple had to develop a general-purpose subject-selection algorithm.

This means that you can select the subject out of any picture in your photo library, even pictures you save from the web (or, if I’m being realistic, sloppy screenshots).

How to cut out people from a picture

Screenshot: D. Griffin Jones/Cult of Mac

First, you need to install iOS 16. iOS 16 is compatible with every iPhone released in 2017 and later: the iPhone 8, X, XS, XR, 11, 12, 13 and 14 models, plus the iPhone SE (second and third generation). iPadOS 16 and macOS Ventura will be released sometime later in October.

Open any picture in the Photos app. Tap and hold on a person, animal or any object, and you’ll see it pop and glow. You can tap Copy to paste it somewhere else or tap Share to send it over AirDrop, iMessage, Snapchat, etc.

You can also drag and drop the image across apps. Tap and hold on the subject, and as soon as it ‘pops,’ drag it away. You can use your other hand to switch apps and drop it somewhere else.

Examples of subjects copied from photos

To show how well it works, I’m going to put the original picture side-by-side with the cutout on a bright pink background. I’m not going to clean up or edit the cutouts at all. These examples show exactly how iOS copies the image.

A self-portrait

Photo: D. Griffin Jones/Cult of Mac

Let’s start with an easy one. This is a picture of me at Apple Park from WWDC22. It does a good job of cutting out my shirt and face; it only messes up my hair. But to be fair, so do most barbershops.

Inanimate objects

Photo: D. Griffin Jones/Cult of Mac

This picture of my Prius in its natural state, covered in mud and salt, also fares well. The body of the car is trimmed perfectly. You might think the driver side mirror gets cut off, but it’s not visible from this angle in the original, either. It only struggles to find the edges of the tires against the shadow.

Photo: D. Griffin Jones/Cult of Mac

Enough easy home runs; let’s give it a really hard one: this picture of my antique trumpet sitting on my beige laminate floor. The mouthpiece is separated enough from its shadow that it is easily isolated, but the middle of the instrument is much harder. A big portion of the shadow is kept in. The bell is cut out from its shadow, but not very confidently around the edges.

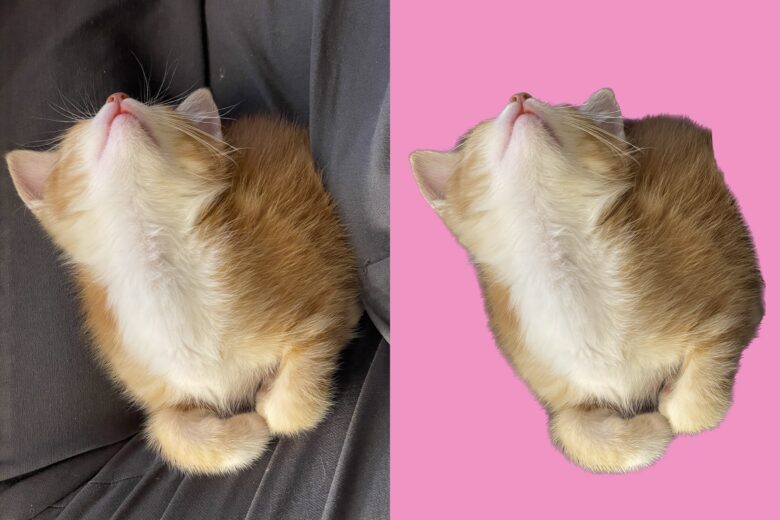

Animals

Photo: D. Griffin Jones/Cult of Mac

Let’s test animal hair. Lots of people will be using this feature on their pet cats and dogs. Raccoons have long, thick hair, so I was curious how this photo would turn out. The background is brightly colored, so it should be able to pick out individual strands of Smoot’s hair if it wanted to, but as you can see, it doesn’t. Instead, it feathers and blurs the edge. It also keeps Smoot’s rock in the picture.

Photo: D. Griffin Jones/Cult of Mac

This picture of my kitten, Sushi, would also be a home run if only it were more confident cutting around the hairs along the edge. The whiskers are completely trimmed off, despite their high contrast against my dark gray slacks.

Food

Photo: D. Griffin Jones/Cult of Mac

Finally, let’s not forget pictures of food. Most food is, correct me if I’m wrong, served on plates, and plate edges are really easy to detect. Even in this photo, angled so that food obscures the back of the plate, iOS 16 easily finds the edges all the way around, although it trims out the saucer and toothpick in the back.

In case you’re wondering, this is a picture of a grilled cheese sandwich that has been battered, deep fried and coated in powdered sugar, served with a hearty pile of chips. This was taken at Melt, a restaurant wherein you may easily consume an entire week of calories in just one sitting. God bless America.

How iOS 16 photo cutout compares to Photoshop

Now, how does it compare to what I can do by hand in Photoshop? Above is the official product photo for the QuickLoadz 24k Super 20, a trailer for moving shipping containers. Removing the background in Photoshop takes me about half an hour of tracing and refining every path.

iOS 16, below, cuts out the image of the truck in just milliseconds, but you can see that it doesn’t get the edges quite right. It heavily blurs the bottom edge, where it has a hard time discerning the trailer from its shadow. Plus, the iOS 16 cutout totally removes the truck’s side mirror and front bumper.

Conclusion: Isolating images using iOS 16’s Visual Look Up is fast, fun

All in all, this feature is a lot of fun. Being able to cut people out of a picture in just seconds can step up your game when it comes to making memes or YouTube thumbnails. It may not yield professional results, but as Apple continues to invest in machine learning models year after year, it’ll only get better from here.
