The highlight of Apple’s recent AI efforts is Live Translation, but that’s not the only new Apple Intelligence feature unveiled at WWDC on Monday. There are also improvements to visual intelligence and Image Playground. Plus, third-party app developers can access Apple’s AI models for free.

But the keynote address kicking off the Mac maker’s developer conference was short on big AI-related announcements compared with what’s coming out of OpenAI or Google. Still, the company made the most of what it had to show.

“Last year, we took the first steps on a journey to bring users intelligence that’s helpful, relevant, easy to use, and right where users need it, all while protecting their privacy. Now, the models that power Apple Intelligence are becoming more capable and efficient, and we’re integrating features in even more places across each of our operating systems,” said Craig Federighi, Apple’s senior vice president of Software Engineering.

Apple AI at WWDC

Apple needed to walk a fine line when talking up its progress in artificial intelligence at WWDC. It got a black eye after unveiling an AI-enhanced version of Siri at WWDC24 that it has yet to launch and that might not reach users until 2026.

But the company couldn’t ignore artificial intelligence, either, because it’s seen as critically important for every type of computer. So Apple did the best it could to hype its AI progress despite having only a limited number of announcements.

Live Translation on iPhone

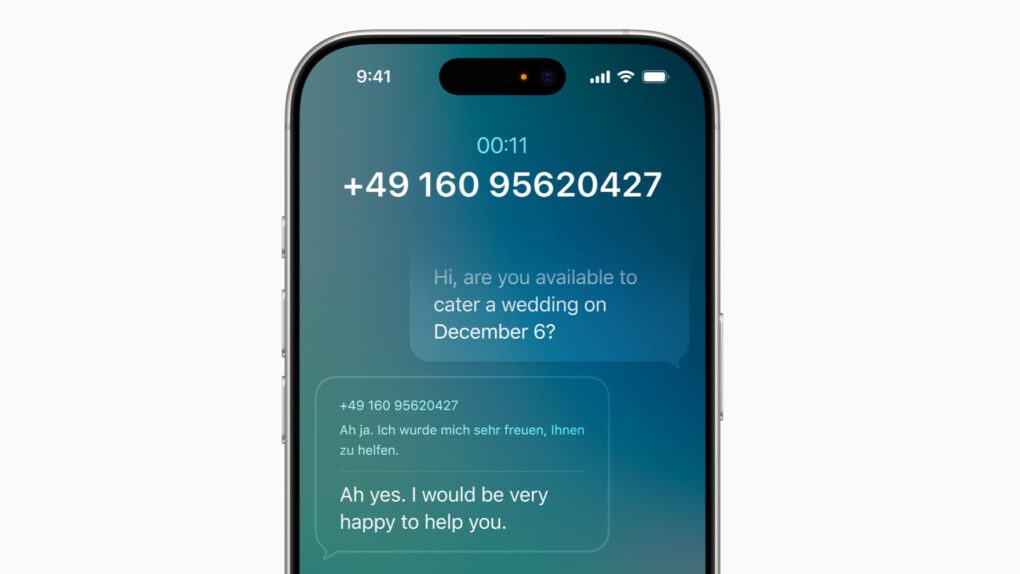

The shining star for Apple Intelligence at WWDC is Live Translation. It translates spoken or written communication into another language, and how it does so depends on the application being used.

In the Messages app, the feature can translate text. During FaceTime calls, a user can read translated live captions while still hearing the speaker’s voice. On a regular phone call, the translation is spoken aloud throughout the conversation. At this point, Live Translation in the Phone and FaceTime apps is available for one-on-one calls in English (U.S., UK), French (France), German, Portuguese (Brazil), and Spanish (Spain).

Of course, this requires iOS 26 or iPadOS 26, which only developers can access at this time. The full releases are scheduled for autumn.

Updates to Genmoji and Image Playground

Apple Intelligence includes Genmoji, which allows users to create their own emojis. The free service is getting updated so users can mix together emojis and combine them with descriptions to create something new.

Image Playground lets iPhone, iPad, and Mac users create pictures from text descriptions — an area where Apple is currently way behind its rivals. The solution is to enable the application to send requests to OpenAI’s ChatGPT. Apple promises, “Users are always in control, and nothing is shared with ChatGPT without their permission.”

Again, the improvements to Genmoji and Image Playground are part of beta software, and the full releases won’t launch until autumn.

New visual intelligence features

The current version of Apple’s visual intelligence uses an iPhone’s camera to get information on an object in the real world. The WWDC keynote promised the feature is being expanded to work across the entire iPhone screen, allowing users to search and take action based on images in applications.

In addition, visual intelligence recognizes when a user is looking at an event and can suggest adding it to their calendar.

Devs get free access to Apple’s LLMs

WWDC is a conference for developers, and Apple had AI-related news for them on Monday.

“Developers will be able to access the on-device large language model at the core of Apple Intelligence, giving them direct access to intelligence that is powerful, fast, built with privacy, and available even when users are offline,” the company said.

Apple is making access free for developers. The framework that exposes the model has native support for the Swift programming language.