When Washington Post tech columnist Geoffrey A. Fowler gave ChatGPT access to a decade of his Apple Watch data, he expected useful insights. Instead, the AI delivered wildly inconsistent health assessments that left him questioning the readiness of AI-powered health tools.

So far, so bad: Apple Health ChatGPT integration

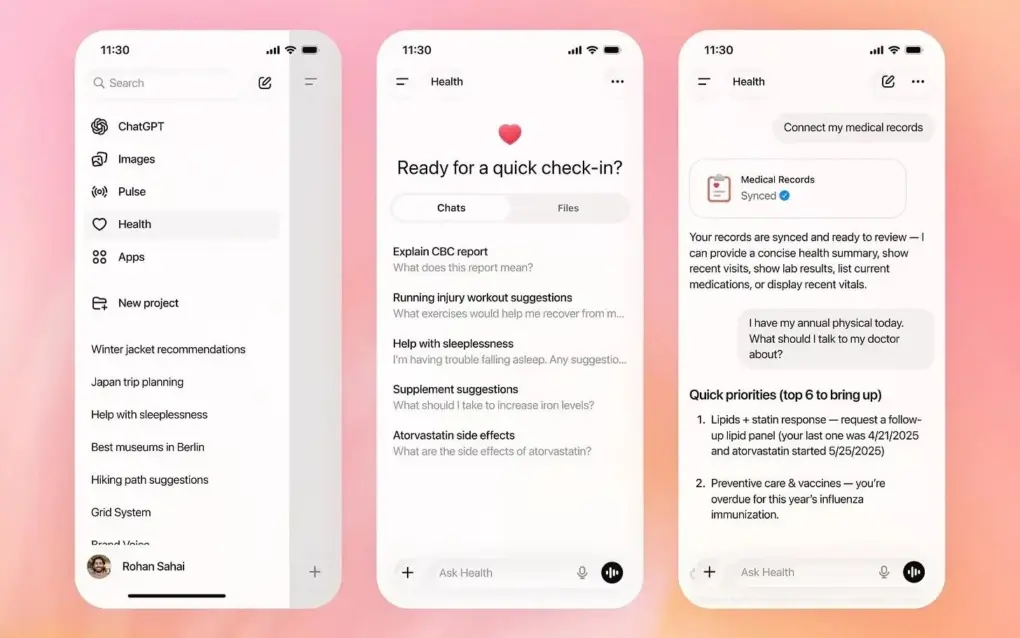

OpenAI added the ability to connect Apple Health data to ChatGPT on January 7, 2026. Fowler linked ChatGPT Health to the 29 million steps and 6 million heartbeat measurements stored in his Apple Health app, then asked the bot to evaluate his cardiac health. The result alarmed him: ChatGPT gave him an F grade. Panicked, he went for a run and sent the report to his actual doctor.

His doctor’s answer was “No”: Fowler wasn’t failing. In fact, his heart attack risk was so low that his insurance likely wouldn’t cover additional testing to prove the AI wrong.

“The more I used ChatGPT Health, the worse things got,” Fowler wrote.

Questionable methodology raises red flags

Cardiologist Eric Topol of the Scripps Research Institute was equally unimpressed with ChatGPT’s analysis of Fowler’s data. Topol called the assessment “baseless” and said the tool is “not ready for any medical advice.”

The AI’s evaluation relied heavily on Apple Watch estimates of VO2 max and heart-rate variability, metrics that experts consider too imprecise for clinical assessment. Apple itself describes its VO2 max figure as an “estimate”; measuring the real thing requires a treadmill and a mask. Independent research has found that these estimates run low by an average of 13%.

ChatGPT also flagged supposed increases in Fowler’s resting heart rate without accounting for the fact that newer Apple Watch models may measure it differently. When Fowler’s own doctor wanted to assess his cardiac health, he ordered a lipid panel, a test that neither ChatGPT nor Anthropic’s competing Claude bot suggested.

Scores that changed with every question

Perhaps most troubling was the inconsistency. When Fowler asked the same question multiple times, his cardiovascular health grade swung wildly between F and B. The bot sometimes forgot basic information about him, including his gender and age, and occasionally failed to incorporate recent blood test results.

Topol said this randomness is “totally unacceptable,” warning that it could either unnecessarily alarm healthy people or give false reassurance to those with genuine health concerns.

OpenAI acknowledged the issue but couldn’t replicate the extreme variations Fowler experienced. The company explained that ChatGPT might weigh different data sources slightly differently across conversations when interpreting large health datasets.

Apple Health ChatGPT integration: Privacy concerns, regulatory gaps

Both OpenAI and Anthropic launched their health features as beta products for paid subscribers. While the companies claim these tools aren’t meant to replace doctors, both readily provided detailed cardiac assessments when asked.

OpenAI says ChatGPT Health takes extra privacy steps, including not using health data for AI training and encrypting the information. However, ChatGPT isn’t a health care provider, so it isn’t covered by the federal health privacy law known as HIPAA.

The regulatory landscape remains unclear. FDA Commissioner Marty Makary recently said the agency’s job is to “get out of the way as a regulator” to promote AI innovation. Both companies insist they’re just providing information, not making medical claims that would trigger FDA review.

For now, Fowler’s experience serves as a cautionary tale about trusting AI with health analysis — no matter how confident the chatbot sounds.