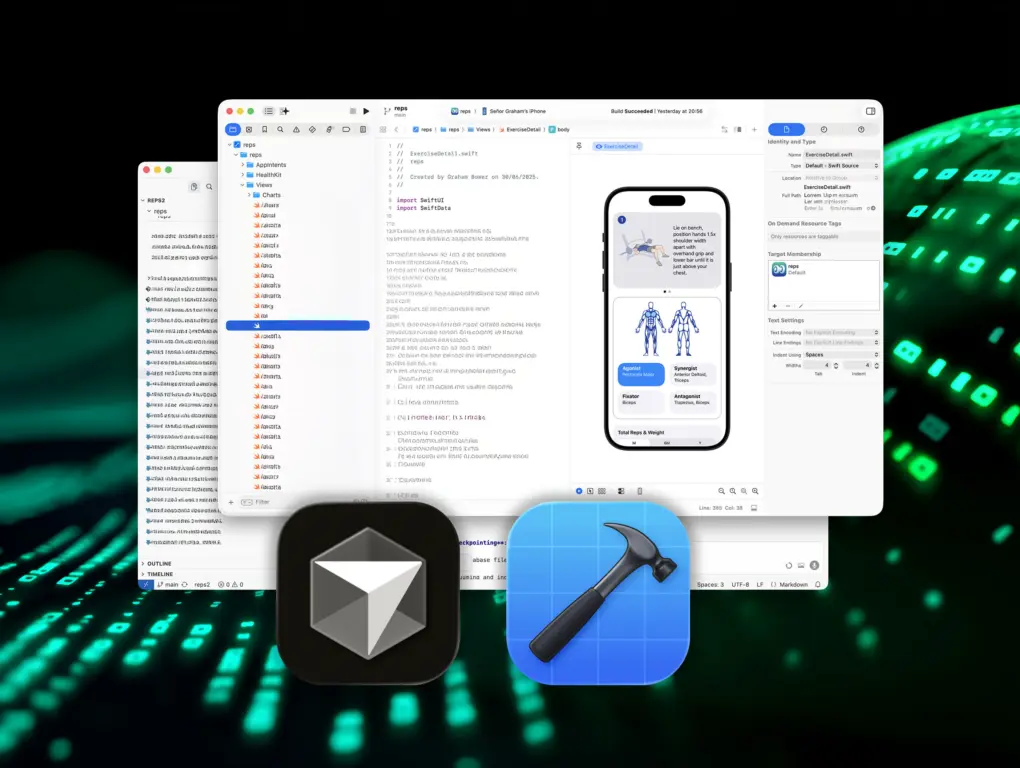

A year ago, I had no clue how to write an iPhone app. Now I’ve shipped a fully fledged strength training app, built with AI coding tools, a practice that has become known as “vibe coding.”

A lot of people get vibe coding wrong. They think it’s just for prototypes and messing around. It’s not. Used properly, it’s a skill you can learn and master. And with modern AI tools like Cursor, and the new Coding Assistant in Xcode, it’s now more accessible than ever.

So, if you’re curious about vibe coding and keen to give it a go, here are ten lessons I learned the hard way.

Mastering the art of vibe coding

Vibe coding is changing how software is made. It lets you create apps without writing the code by hand. Instead, an AI assistant (sometimes called an “agent”) generates the code for you. In practice, that means describing what you want in a chat interface, similar to ChatGPT, built into coding tools like Xcode or Cursor.

It might seem silly to say this is a skill you can learn. After all, aren’t the AI assistants doing the heavy lifting?

Well, yes and no. Modern models can generate whole apps. But how you write prompts, evaluate the results, and guide the project makes all the difference.

So what skills did I actually bring to this?

I’m a graphic designer, not a developer. I can write HTML and CSS, but I’d never built a native app before. What I do have is two decades of experience designing apps and websites, and working closely with developers. I know how to brief technical people, and I’ve learned how engineers think.

I also designed an earlier version of Reps & Sets fourteen years ago, with my partner. So, I understood the product deeply. I even reused the original data model as a starting point. But I didn’t know Swift, and I’d never shipped an app myself.

At the time I started this project, Xcode’s AI features were limited, so I used Cursor as my AI coding environment, alongside models like Claude and GPT. I still relied on Xcode for asset management, debugging, testing, provisioning, and submitting to the App Store.

Top 10 tips for successful vibe coding

Tip 1: Do your homework

You don’t need programming skills to use an AI coding assistant. But you do need to understand what it’s doing for you.

Before I started, I spent a few hours in Apple’s free Swift Playgrounds tutorials. I also binged WWDC sessions to understand how iOS apps are structured and how Apple’s UI components fit together.

Think of it like watching football. You don’t have to play the game, but if you don’t know the rules, you won’t understand what’s happening on the field.

Tip 2: Think in big chunks and small chunks

Apps are complicated. Describing one in a single prompt would take thousands of words and would just confuse your AI assistant. Long prompts dilute attention. Important details get skipped. So, think about your project in big chunks, but brief your AI assistant in small ones.

As you break features down, you’ll discover there’s a logical order to building them. In my case, I had to build the exercises tab before I could build workout logging, because workouts depend on exercises. And even the exercises tab wasn’t one task. It had to be broken down further: list all exercises, display illustrations, filter by muscle group, and so on.

Tip 3: Ask questions

You don’t always have to tell your AI assistant what to do. Sometimes it’s better to ask it a question.

If it changes a file you weren’t expecting, ask for an explanation. Sometimes there’s a good reason. Sometimes there isn’t. Either way, you’ll learn something. And if you’re unsure how to implement a feature, ask for options before committing to one. The assistant often suggests approaches you might not have considered.

In Xcode, click the lightning icon in the Coding Assistant window to disable “Automatically apply code changes.” That lets you explore ideas and ask questions without the assistant touching your code until you’re ready. In Cursor, set the agent to Ask mode.

Tip 4: Use clean language

Most of the time, we don’t just describe a problem, we frame it. And inside that framing are hidden assumptions about what’s going on.

AI models are extremely sensitive to language. They don’t just read our words. They infer intent from how we structure them. If a prompt subtly assumes what the problem is, the model will often follow that path, even if it’s the wrong one. For example, you might describe a bug in a way that unintentionally narrows the search space. Saying “the Save button is broken” assumes the fault lies in the button, whereas “when I tap Save, the workout doesn’t appear in the list” only reports what happened. Given the first version, the AI optimizes within that frame instead of questioning it.

Clean language is a technique I learned years ago on a coaching course. It came from psychotherapy and counseling but is increasingly used in business and education. The idea is simple: describe what you observe, without embedding interpretation. Give the other party, human or machine, room to reason for themselves.

It turns out that what works in psychotherapy works surprisingly well in vibe coding too.

Tip 5: Provide context

Don’t just tell your AI assistant what to build. Tell it why.

When humans build software, they make dozens of small judgment calls: naming functions, structuring data, choosing defaults. Those decisions only make sense if they understand the purpose behind the feature. The same is true for AI.

When you explain why something matters, the AI assistant can make better decisions on its own. And sometimes even surprise you. For example, when I added an option to specify the incline of a gym bench, Claude set the range from -20° to 90°. I hadn’t specified that. When I asked why, it explained that this is the standard range of an adjustable bench.

Because I’d described the real-world context, it filled in the gaps correctly. Context doesn’t just improve code. It unlocks the model’s broader knowledge.
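To make that concrete, here is a minimal Swift sketch of how a range like that might be captured in code. The type and property names are hypothetical and not taken from Reps & Sets. Only the -20 to 90 degree range comes from the story above.

```swift
/// Hypothetical model for an adjustable bench setting.
/// The names here are illustrative, not the app's actual code.
struct BenchIncline {
    /// Allowed angles in degrees, using the range Claude chose in the example above.
    static let allowedDegrees: ClosedRange<Int> = -20...90

    /// The stored angle, always inside `allowedDegrees`.
    let degrees: Int

    /// Clamps any requested angle into the allowed range.
    init(requestedDegrees: Int) {
        degrees = min(
            max(requestedDegrees, Self.allowedDegrees.lowerBound),
            Self.allowedDegrees.upperBound
        )
    }
}
```

With this sketch, `BenchIncline(requestedDegrees: 120).degrees` is 90, so an out-of-range value can never reach the rest of the app. The point is not the clamping itself, but that a sensible default like this can come from the context in the prompt rather than from an explicit instruction.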

Tip 6: Provide background material

AI models are trained on past data. Apple’s frameworks, especially SwiftUI, evolve quickly. That creates a gap. There simply isn’t as much high-quality, up-to-date Swift training data available to LLMs as there is for older languages like Python or JavaScript. As John Gruber recently pointed out, Swift 6 highlights this issue clearly.

While adding a Liquid Glass feature to my app, Claude insisted that iOS 26 didn’t exist. The fix wasn’t to argue with it, but to supply better context. Once I asked Claude to search the web for the relevant documentation, it implemented the feature without any problems.

When working with new APIs, include links to the relevant WWDC sessions in your prompt and ask the assistant to base its solution on the transcript. For example, when I implemented workout mirroring between iPhone and Apple Watch, including the exact WWDC video dramatically improved the results.

AI can only work with what it knows or what you give it.

Tip 7: Consult different models and escalate

If you get stuck, don’t assume the model is right. Get a second opinion.

Different AI models have different strengths, training data, and reasoning styles. If one struggles with a bug, another might spot the issue immediately. It’s like bringing in a fresh pair of eyes. For most tasks, I found Claude worked best, but when it struggled, I would turn to GPT.

It’s also worth remembering that coding assistants and chat assistants don’t always behave the same way, even when powered by similar models. Sometimes I’ve talked a problem through in ChatGPT, then shared that reasoning with Claude. The combination often worked better than either one alone.

And when all else fails, escalate. More advanced models are slower and more expensive, but they can offer deeper reasoning when you’re dealing with complex architecture or subtle bugs.

Tip 8: Use README files

When you’re building something complex, you’ll eventually hit a wall. You rewrite the prompt. You switch models. Nothing fixes it.

In my case, the app’s user interface gradually became slower and slower until it was almost unusable. The root cause was some rookie architectural mistakes. The kind that work fine in a prototype but fall apart in a real app. I eventually diagnosed the issue and fixed it. But as development continued, the same patterns kept creeping back in.

That’s when I changed strategy. Instead of just fixing the bug, I asked the assistant to write a README file documenting the architectural rules for the project. What went wrong, why it went wrong, and how to avoid it in future.

Those README files aren’t for me. They’re for the AI. They act as guardrails: use background actors for CloudKit writes, don’t block the main thread, filter large queries properly, and so on. Every time a new feature is built, the assistant refers back to those rules. In vibe coding, your README becomes part of the architecture.
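As an illustration of what a rule like “use background actors for writes” can look like in practice, here is a rough Swift sketch. It is not the code from Reps & Sets, and it deliberately avoids the real CloudKit API: `RemoteDatabase` and `SyncWriter` are hypothetical names standing in for whatever your project uses.

```swift
/// Hypothetical stand-in for a remote store such as CloudKit.
/// No real CloudKit calls are made in this sketch.
protocol RemoteDatabase: Sendable {
    func save(_ record: String) async throws
}

/// An actor protects its own state, and its methods run off the
/// main thread by default, so a slow save does not freeze the UI.
actor SyncWriter {
    private let database: any RemoteDatabase

    /// Number of records saved successfully so far.
    private(set) var savedCount = 0

    init(database: any RemoteDatabase) {
        self.database = database
    }

    /// Saves one record and counts it only if the save succeeds.
    func write(_ record: String) async throws {
        try await database.save(record)
        savedCount += 1
    }
}
```

UI code would call something like `try await writer.write(record)` from inside a `Task`, which keeps the waiting away from the main thread. A README rule that points at a pattern like this gives the assistant something specific to follow each time it adds a feature.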

Tip 9: Check every line of vibe code before committing

I don’t understand every line of code my AI assistants generate. But I read all of it before committing.

There are two reasons. First, it’s the fastest way to learn. Second, you need to be sure the assistant has done only what you intended. Nothing more, nothing less.

Some files are especially sensitive. Your data model, for example. A small change there can have serious consequences. In my case, it could mean deploying schema updates in CloudKit. Not something you want to do by accident.

If I see a change I wasn’t expecting, I always stop and ask why it was made. More than once, that conversation has revealed a misunderstanding that could have turned into a costly mistake.

AI writes code. You’re still responsible for it.

Tip 10: Treat your AI assistant like a colleague

At this point, you might notice a pattern! The easiest way to write better prompts is to stop treating AI like a vending machine for code. Treat it like a colleague.

When I work with developers, I don’t just hand over instructions and wait for them to be implemented. We share the goal. We talk through constraints. We challenge each other’s ideas. That’s how better solutions emerge.

The same mindset works surprisingly well with AI. Explain why a feature matters. Share context. Explore options. Invite alternatives. The more collaborative your approach, the better the results tend to be.

Can you really “motivate” a machine? Maybe not. But AI models are trained on human conversation. When you communicate clearly and thoughtfully, they respond in kind. Vibe coding works best when you collaborate, not command.

Now it’s your turn

A year ago, I couldn’t write a Swift app. By the time I shipped Reps & Sets, I understood SwiftUI, CloudKit, and Apple Watch integration far better than I ever expected. I learned by building, with AI as my collaborator.

Today, Reps & Sets is live on the App Store for iPhone, iPad, and Apple Watch. So, if you’re curious what vibe coding can produce in the real world, download it and see for yourself.

And if you’ve been sitting on an app idea, this might be your moment.