At today’s Platforms State of the Union, Apple went into more depth on the updates coming to their software: interactive widgets for iOS, iPadOS and now on the macOS desktop; big updates to watchOS; and the introduction of visionOS, the operating system that runs on Apple’s new Vision Pro.

There are loads of new features that developers will be able to take advantage of that Apple didn’t highlight in the main Keynote. Thus far, they’ve covered improvements to the in-app camera, a standard tips balloon, and an easier way to make animations in SwiftUI.

New features to Swift and SwiftUI

Swift and C++ are now interoperable. By turning on a single build setting, developers can call an existing backlog of C++ code directly from Swift projects, significantly reducing the overhead in big cross-platform projects.

New on all platforms are Swift macros, which generate repetitive code at compile time, so developers can adopt a feature with a short annotation instead of writing the boilerplate by hand.

In SwiftUI, a big focus is being placed on animations. Animations are easy to implement. Developers specify keyframes for the animation and let the device interpolate the animation between them. The animations are freely interruptible, so they play nicely with the user interface. SF Symbols, a large library of standard icons provided by Apple, are now animated as well.
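To make the keyframe idea concrete, here is a minimal sketch in plain Swift of what "interpolating between keyframes" means. It is not SwiftUI’s keyframe API: the `Keyframe` type and the `interpolatedValue(at:in:)` function are hypothetical names made up for this illustration, and it assumes simple linear interpolation, whereas a real animation system would typically offer smoother curves.

```swift
// Hypothetical type for illustration only; this is not SwiftUI's keyframe API.
struct Keyframe {
    let time: Double   // seconds from the start of the animation
    let value: Double  // the animated property at that time, e.g. a scale factor
}

/// Returns the linearly interpolated value at `time`.
/// Assumes `keyframes` is sorted by ascending time.
func interpolatedValue(at time: Double, in keyframes: [Keyframe]) -> Double {
    guard let first = keyframes.first, let last = keyframes.last else { return 0 }
    // Clamp to the first and last keyframes outside the animated range.
    if time <= first.time { return first.value }
    if time >= last.time { return last.value }
    // Find the pair of neighbouring keyframes that surrounds `time`.
    for (start, end) in zip(keyframes, keyframes.dropFirst()) where time <= end.time {
        let progress = (time - start.time) / (end.time - start.time)
        return start.value + (end.value - start.value) * progress
    }
    return last.value
}

// A one-second "bounce": scale up to 1.5x at the halfway point, then back down.
let bounce = [
    Keyframe(time: 0.0, value: 1.0),
    Keyframe(time: 0.5, value: 1.5),
    Keyframe(time: 1.0, value: 1.0),
]
let midway = interpolatedValue(at: 0.25, in: bounce)
print(midway)
```

The developer only supplies the three keyframes; every frame in between is computed on demand, which is also why an animation like this can be interrupted at any point in time.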

Photo: Apple

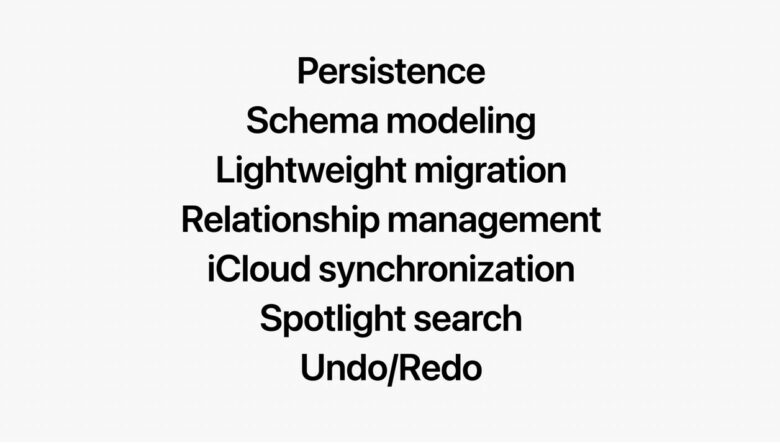

SwiftData is a new API designed to replace Core Data, built on the new macro system. It lets developers model and persist data in a fast and energy-efficient way. With native iCloud synchronization, apps can save and restore their underlying data while edge cases like conflicts and updates are handled seamlessly.

Widgets get an upgrade

Photo: Apple

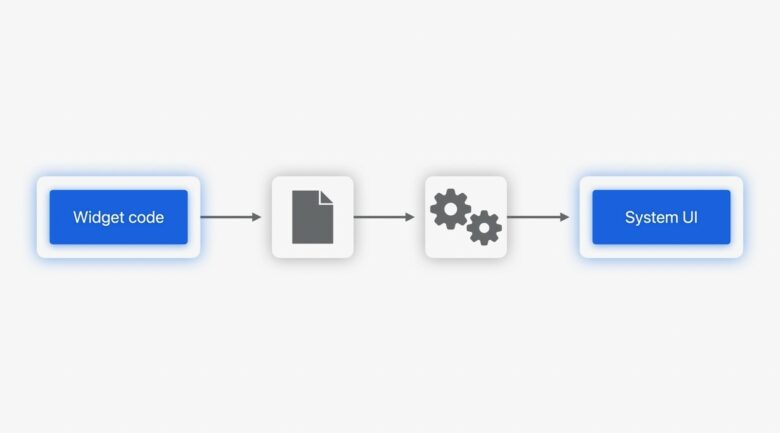

Widgets are now interactive on iOS, iPadOS and macOS. They can show up in more places: on the iPad Lock Screen, in StandBy on iPhone and on the macOS desktop.

Apps will generate a view in SwiftUI that is archived by the system and rendered later whenever the user sees the widget. The full app doesn’t need to run in order for the widget to work, so running interactive widgets won’t kill your battery life. Thanks to Continuity, widgets from iPhone-exclusive apps, along with all their interactions, can appear on the Mac.

Helpful tips will explain how your apps work

Photo: Apple

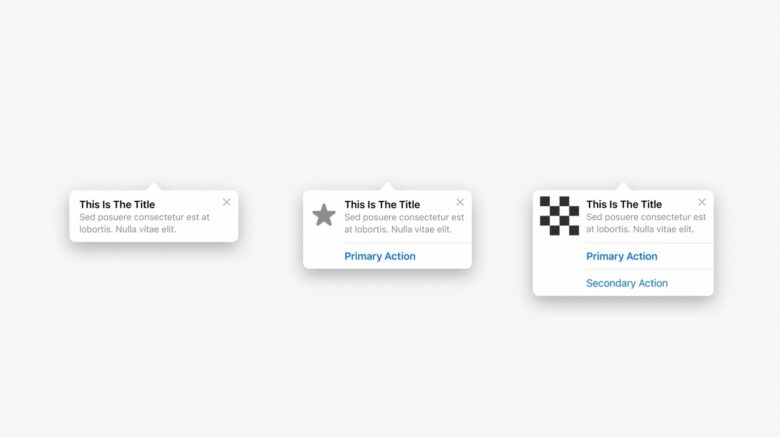

Some apps throw you into the deep end with a complicated user interface of buttons and features; some make you go through an annoyingly long introduction process. TipKit is a framework developers can add that will explain how apps work in a friendlier way. And best of all, it won’t slow you down with tips you’ve seen before!

Improvements to the Camera

Photo: Apple

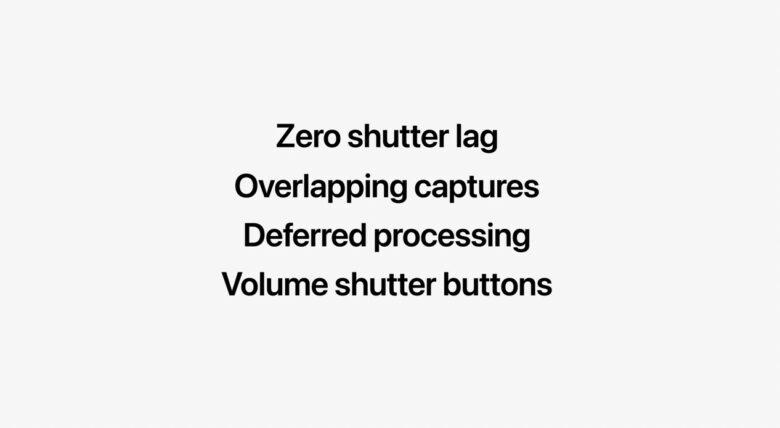

Improvements to the camera will reduce shutter lag and let other apps use volume buttons to take pictures. A complete HDR workflow will soon be possible with a new system-wide ISO standard for displaying HDR photos inside other apps.

On iPad, any USB camera can now be used. On Apple TV, external cameras and microphones can put FaceTime calls on the big screen using Continuity Camera.

“A huge design refresh” to watchOS 10 is easy to adopt

Photo: Apple

Lori Hylan-Cho, Senior Manager of the watchOS System Experience, discussed the big changes coming to Apple Watch. “This year, watchOS 10 is getting a huge design refresh,” she said. SwiftUI powers much of this redesign to be “more dynamic, more colorful and more glanceable.”

Vertical tab views let you scroll through a list of items, where each item can itself be a scrolling view of images, buttons and text. Buttons can be placed inside the toolbar at the top of the screen, pushing controls all the way to the edge to make the best of the limited screen space.

A new API in Core Motion will use the accelerometer and gyroscope to give developers more insight into how the watch is moving on your wrist during a workout. Apple demonstrated this earlier in the Keynote by showing how it can be used to analyze your golf and tennis game.

Developers get these features automatically when building for watchOS 10.

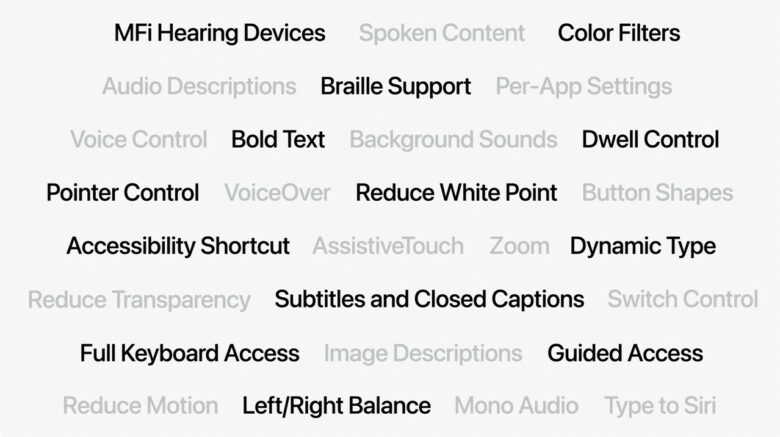

New accessibility features

Photo: Apple

Pause Animated Images will pause animations on the web and inside apps. This can help users who get nauseous from motion or flashing lights. Developers can implement a static version of an animated image to make this look seamless.

visionOS comes with many accessibility features out of the box — but more details will come on that later.

App Privacy

A new photo picker makes it easier to share only one photo with an app, without giving it access to your whole library and without going through a complicated process of sharing a selection of photos.

App Privacy Manifests are a new feature available to developers that will create more transparent and accurate app privacy labels in the App Store.

Sensitive Content Analysis is an NSFW content framework that runs entirely on your device. It processes images and videos in a private and secure way, providing a sensitive content warning to protect users from content they don’t want to see.

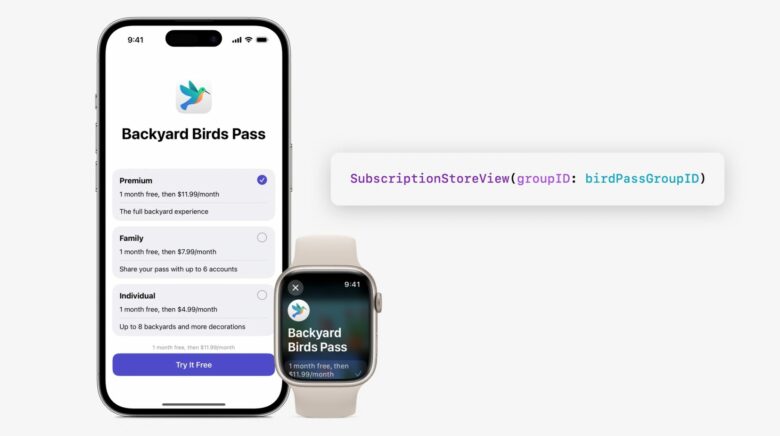

New behind-the-scenes features for developers

Photo: Apple

New features in StoreKit will make it easier for developers to create great-looking subscription and in-app purchase pages in their apps across all devices, even Apple Watch. Developers can create a subscription store view with a single line of SwiftUI code. Of course, with a couple more lines, you can customize the look and feel of the dialog. SKAdNetwork will help developers provide useful information to advertisers, like how effectively ads are performing, while preserving user privacy.

In Spotlight, when you search for an app, you’ll get quick links to the features inside. When you search for “freeform,” for example, you’ll see a button to create a new board alongside the icon to launch the app. This is part of the new features coming to App Intents. This will also allow you to use Siri more naturally to launch Shortcuts.

There are expanded features in Xcode 15 for code testing. A new interface pairs a screen recording with the timeline of each predefined test, so developers can figure out exactly what’s going wrong when an app crashes under testing.

Another great improvement to Xcode is that the app size has been reduced by 50%, with all platform architectures available on-demand. This is a monumental reduction in the storage Xcode requires, given its tendency to suck up all the available disk space on your Mac.

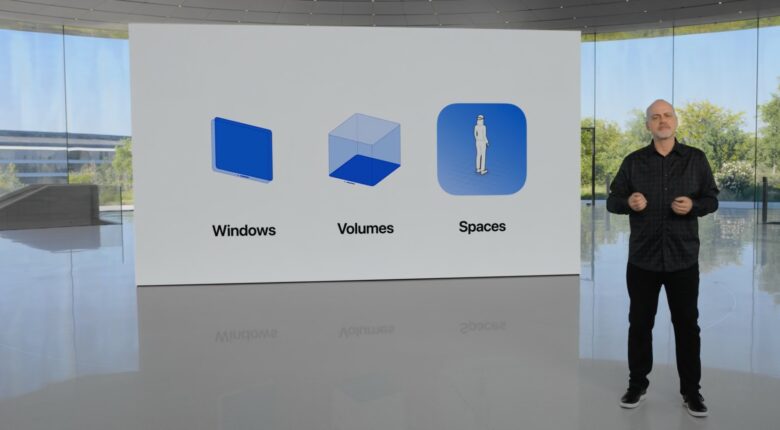

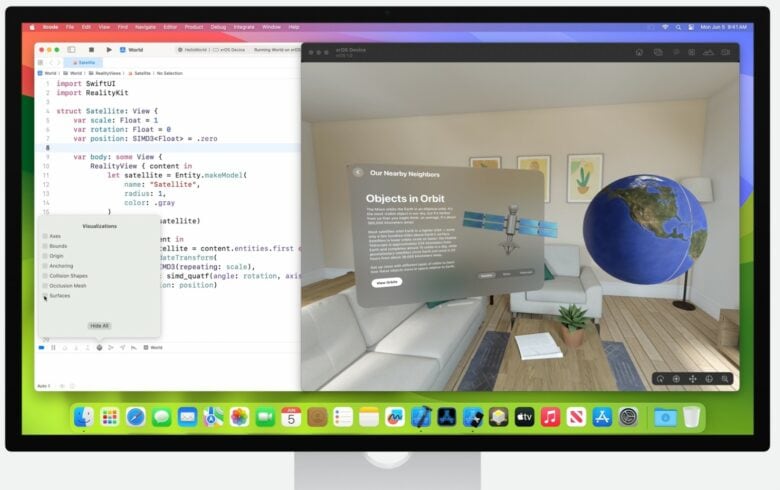

Developer tools and feature details for the Vision Pro

Photo: Apple

Mike Rockwell, Vice President of the Technology Development Group, shed some light on how apps in the Vision Pro headset work.

SwiftUI, RealityKit and ARKit are all extended into visionOS, and UIKit is supported as well, but AppKit from macOS is not.

There are three fundamental parts to an app in visionOS.

- Apps can have one or more windows, which are SwiftUI scenes that combine 2D content with 3D objects.

- Volumes are objects that can appear interactively in your environment, “like a game board or a globe. Volumes can be moved around this space and viewed from all angles.”

- A Full Space is a background environment that replaces the room you’re in, while leaving Windows and Volumes where they are.

Apps launch into a Shared Space, where apps — their windows and volumes — can freely float around you in an infinite canvas.

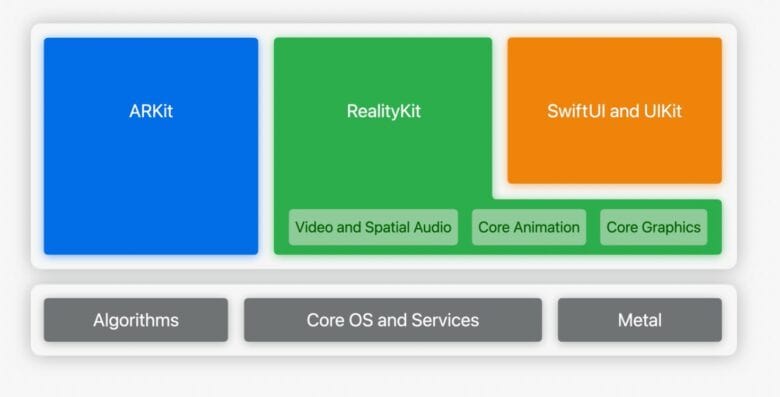

Photo: Apple

SwiftUI and UIKit run on top of RealityKit to draw 3D, volumetric user interfaces. ARKit runs seamlessly as before, placing virtual objects around the real world. The ZStack is a standard user interface element for layering objects. Now, in visionOS, developers can give these layers real, physical depth, separating buttons and objects from the background.

Dynamic foveation will increase the clarity of rendering wherever your eyes are focused, and save energy by reducing the image where you can’t see clearly. Objects are automatically lit to match the surrounding room, so they will look natural wherever you may be.
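As a rough illustration of the idea behind foveated rendering, the plain Swift sketch below maps the angle between a point on screen and the user’s gaze to a render-resolution scale. Everything here is an assumption made up for illustration: the `renderScale(angleFromGaze:)` function, the linear falloff, and the specific radii and minimum scale are hypothetical, and none of it reflects how visionOS actually implements dynamic foveation.

```swift
/// Hypothetical falloff, for illustration only: full resolution within
/// `fovealRadius` degrees of the gaze point, dropping linearly to
/// `minimumScale` at `peripheryRadius` degrees and beyond.
func renderScale(angleFromGaze: Double,
                 fovealRadius: Double = 5,
                 peripheryRadius: Double = 40,
                 minimumScale: Double = 0.25) -> Double {
    // Where the eyes are focused, render at full resolution.
    if angleFromGaze <= fovealRadius { return 1.0 }
    // Far in the periphery, render at the lowest resolution to save energy.
    if angleFromGaze >= peripheryRadius { return minimumScale }
    // In between, blend linearly from full resolution down to the minimum.
    let t = (angleFromGaze - fovealRadius) / (peripheryRadius - fovealRadius)
    return 1.0 - t * (1.0 - minimumScale)
}

// Sample the scale at a few angles (in degrees) away from the gaze point.
for angle in [0.0, 10.0, 22.5, 60.0] {
    print("angle \(angle): scale \(renderScale(angleFromGaze: angle))")
}
```

Because the scale is recomputed from the current gaze direction, the sharp region follows the eyes from frame to frame, which is what makes the foveation "dynamic".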

MaterialX is the open standard for creating graphical shaders — how an object looks in the lit environment — and it’s fully supported in visionOS.

Skeletal hand tracking will let apps see exactly where your hands and fingers are. The standard way to interact with Vision Pro is by pinching your fingers, but in the interest of accessibility, users can also interact by flicking their wrist or wiggling their head. And of course, it supports Dynamic Type to help low-vision users see the interface clearly.

Photo: Apple

The Xcode Simulator will make it easy to test your Vision Pro apps on a Mac. And if you have a Vision Pro, you can take this to the next level. Using your Mac in a floating window inside your Vision Pro, you can write code for the headset side by side with your running app.

And Reality Composer Pro makes it easy to interact with your 3D objects, edit their appearance, and seamlessly bring those changes back into your code.

Photo: Apple

The Unity engine runs seamlessly on the new device, making it easy to bring over cross-platform games to the new platform.

Without requiring any access to cameras, the many sensors provide apps with a detailed 3D mesh of the environment. RealityKit uses this to create realistic audio for the space.

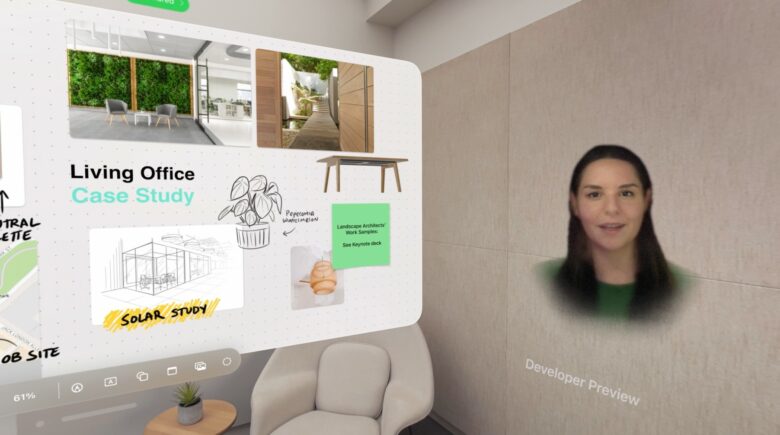

Photo: Apple

SharePlay and FaceTime are both essential to the experience of Vision Pro. While the device supports standard two-dimensional FaceTime calls right now, Spatial Personas will allow people to appear in full 3D. As you walk around on a FaceTime call, you can see around the side of someone’s head as if they were really a three-dimensional human in the room. Apple described this as a prerelease feature — which is a bit odd since this is not currently a released device.

Even more detail will be given in over 175 session videos that will be published throughout the week, 40 of which are all about Vision Pro.