“Hey Siri” can be inadvertently activated by similar-sounding phrases such as “A city” and “Hey Jerry,” according to researchers from Germany’s Ruhr-Universität Bochum and the Bochum Max Planck Institute.

Siri is far from the only voice assistant prone to false triggers, however. The study compiled a list of more than 1,000 words that can accidentally activate various A.I. assistants.

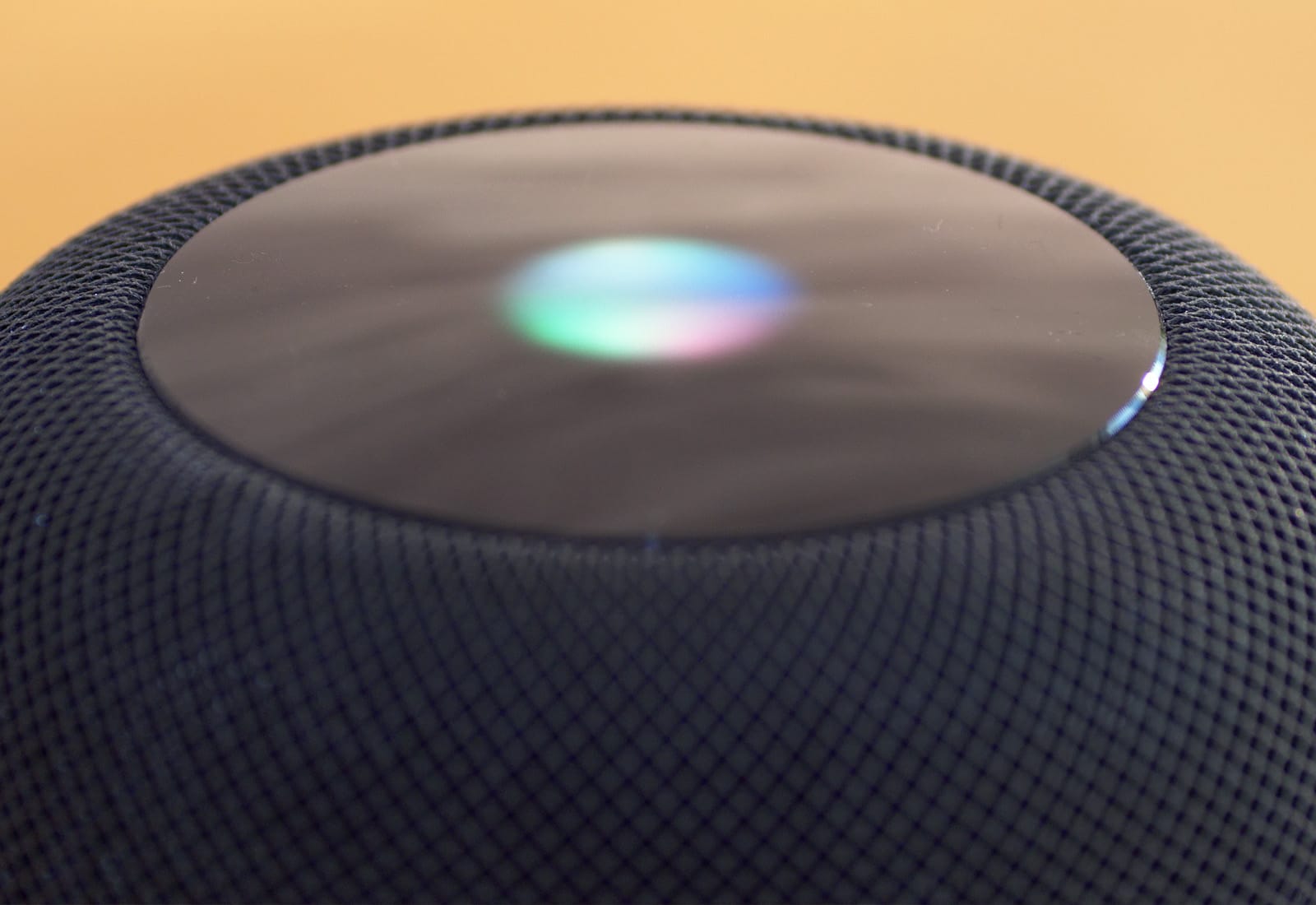

Siri and beyond: False wake words

Words in English, German, and Chinese were all found to be capable of erroneously activating smart assistants. Along with Siri, the affected assistants included Amazon’s Alexa, Google Assistant, Microsoft Cortana, and more. The study showed that a false trigger could happen simply when a device hears dialogue from shows like Modern Family and Game of Thrones. Everyday conversation can also accidentally trigger activation.

Other examples included Alexa reacting to the words “unacceptable” and “election,” Google Assistant responding to “OK, cool” instead of “OK, Google,” and Microsoft’s Cortana being confused by “Montana.”

“The devices are intentionally programmed in a somewhat forgiving manner, because they are supposed to be able to understand their humans. Therefore, they are more likely to start up once too often rather than not at all,” said Professor Dorothea Kolossa, one of the researchers involved in the study.
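The trade-off Kolossa describes can be illustrated with a toy sketch. This is not the researchers’ method or any manufacturer’s implementation: real assistants score audio, not text, and the spelling-based similarity score and both threshold values below are assumptions chosen purely for illustration.

```python
from difflib import SequenceMatcher

WAKE_WORD = "cortana"  # wake word used for this illustration

# Hypothetical thresholds, not taken from the study or any vendor.
STRICT = 0.9
FORGIVING = 0.7


def similarity(heard: str, wake_word: str = WAKE_WORD) -> float:
    """Crude spelling similarity in [0, 1]; a stand-in for an acoustic match score."""
    return SequenceMatcher(None, heard.lower(), wake_word.lower()).ratio()


def triggers(heard: str, threshold: float, wake_word: str = WAKE_WORD) -> bool:
    """Return True if the heard word is similar enough to wake the assistant."""
    return similarity(heard, wake_word) >= threshold


if __name__ == "__main__":
    for word in ("Cortana", "Montana", "banana"):
        print(word, "strict:", triggers(word, STRICT), "forgiving:", triggers(word, FORGIVING))
```

With the stricter threshold, only the real wake word triggers. With the more forgiving one, “Montana” wakes the assistant as well, which is the kind of false trigger the study catalogued.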

Privacy issues in false wake words

From a privacy perspective, the problem with accidental wake words is that they can result in audio being sent to smart assistant makers when not intended. These audio snippets may then be transcribed and read by employees of the companies in question. Even when the system recognizes a false alarm, a few seconds of audio may still be transmitted.

Last year, The Guardian broke a story about how Apple subcontractors were employed to listen to Siri recordings. Apple said that it listened to a certain portion of Siri recordings for “grading” purposes. The Guardian claimed that contractors had accidentally heard “confidential medical information, drug deals, and recordings of couples having sex.”

A privacy vs. engineering trade-off

“From a privacy point of view, [the report about A.I. assistant wake words] is of course alarming, because sometimes very private conversations can end up with strangers,” said Thorsten Holz, another researcher on the project. “From an engineering point of view, however, this approach is quite understandable, because the systems can only be improved using such data. The manufacturers have to strike a balance between data protection and technical optimisation.”

Have you had any problems with Siri — or other smart assistants — being activated when you don’t want them to be? Let us know in the comments below.