Future iPhone software and cameras could support sign language recognition, alongside a range of other in-air interface gestures, according to a patent application published today.

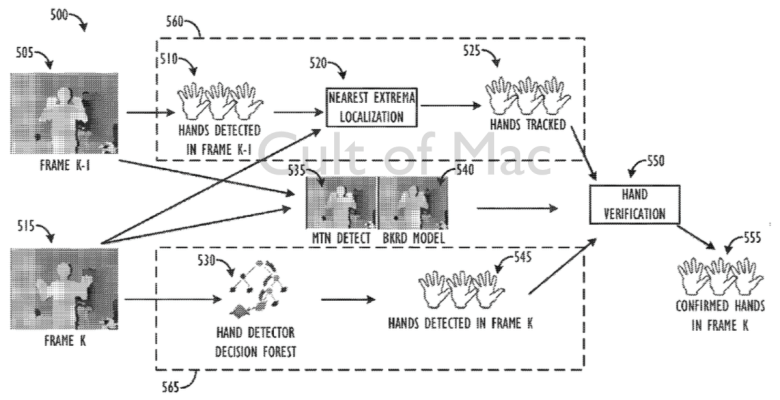

Titled “Three-Dimensional Hand Tracking Using Depth Sequences,” Apple’s patent application describes how devices would be able to locate hands and track their movement through three-dimensional space in video streams, similar to the face-tracking technology Apple already uses in its Photo Booth app.

Photo: USPTO/Apple

There are plenty of possible uses for this kind of technology, ranging from the aforementioned sign language recognition, such as for American Sign Language (ASL), to pose and gesture detection that could add new interface controls to iOS devices.

Apple has been working in this area for a while now, with its 3D head-tracking patents first coming to light in 2009, more than half a decade ago. The effort took a step forward in late 2013, when Apple acquired PrimeSense, the Israel-based company behind the 3D motion tracking in the original Xbox Kinect.

Patent applications don’t guarantee that an actual product will ship, of course, but it’s great to see evidence that Apple is continuing to look for new ways to push its current touch interface even further.

What possible applications could you envisage for Apple’s hand-tracking patent? Leave your comments below.

Source: USPTO

Via: Patently Apple