This week, Siri’s supposed pro-life leanings made a lot of news. Essentially, a bunch of people got upset because Siri couldn’t find a local abortion clinic, even though abortion clinics don’t actually call themselves that. Apple denied that Siri had any pro-life leanings whatsoever, saying instead that the service is still in “beta.”

So what really happened? Well, Apple just learned its first lesson about search: you’re held responsible when the information people expect to see doesn’t show up in the results, even if that information is only tangentially related to the actual words in the query. It’s a headache Google’s been dealing with for almost a decade.

Danny Sullivan over at Search Engine Land explains:

First, Siri doesn’t have answers to anything itself. It’s what we call a “meta search engine,” which is a service that sends your query off to other search engines.

Siri’s a smart meta search engine, in that it tries to search for things even though you might not have said the exact words needed to perform your search. For example, it’s been taught to understand that teeth are related to dentists, so that if you say “my tooth hurts,” it knows to look for dentists.

Unfortunately, the same thing also makes it an incredibly dumb search engine. If it doesn’t find a connection, it tends not to search at all.

When I searched for condoms, Siri understood those are something sold in drug stores. That’s why it came back with that listing of drug stores. It knows that condoms = drug stores.

It doesn’t know that Plan B is the brand name of an emergency contraception drug. Similarly, while it does know that Tylenol is a drug, and so gives me matches for drug stores, it doesn’t know that acetaminophen is the chemical name of Tylenol. As a result, I get nothing.
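Sullivan’s description boils down to a lookup step that happens before any search is sent. Here is a hypothetical Python sketch of that behavior, not Apple’s actual code. The `CATEGORY_FOR_TERM` table and the `route_query` function are invented for illustration, and the table contains only the examples mentioned above.

```python
from typing import Optional

# Hypothetical term-to-category table (illustrative only, not Siri's real data).
# Only terms someone has explicitly mapped can trigger a search.
CATEGORY_FOR_TERM = {
    "tooth": "dentists",
    "condoms": "drug stores",
    "tylenol": "drug stores",
}


def route_query(query: str) -> Optional[str]:
    """Return the business category to search for, or None if no term is known."""
    for word in query.lower().split():
        # Strip trailing punctuation so "acetaminophen?" matches "acetaminophen".
        category = CATEGORY_FOR_TERM.get(word.strip(".,?!"))
        if category is not None:
            return category
    # No known connection: nothing is forwarded to a search engine at all.
    return None


print(route_query("My tooth hurts"))                  # dentists
print(route_query("Where can I buy acetaminophen?"))  # None
```

“Tylenol” finds drug stores, but “acetaminophen” and “Plan B” fall through to `None`. This is the gap Sullivan describes: the system is only as good as the connections someone has taught it.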

In other words, Siri’s having something of an uncanny valley problem. It’s only as smart as the search engines it is linked to, like Wolfram Alpha and Yelp. But because the voice recognition is so good and the way Siri interacts with you is so lifelike, people expect her to be as smart as a person… even if she isn’t one.

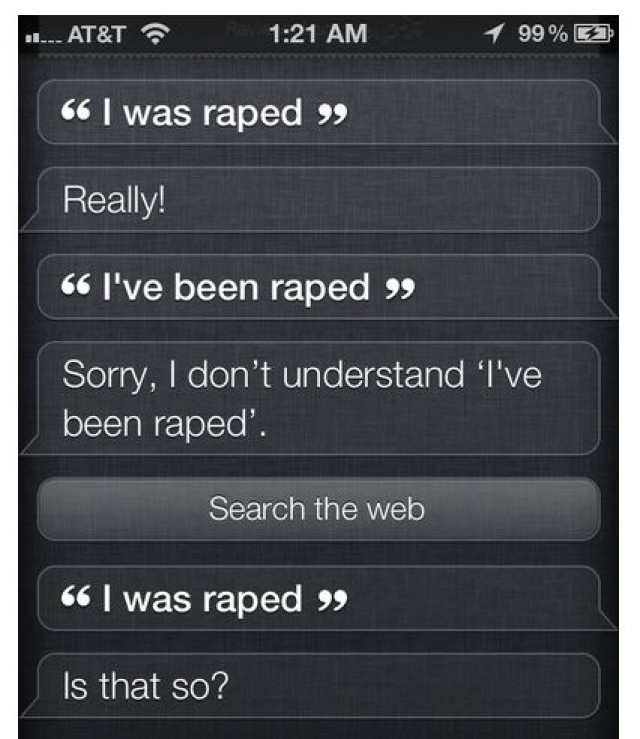

So when Apple says “Siri is a beta,” they mean it. Just like Google had to do, Apple needs to learn from experience and program Siri to understand what results to give for things like “I’ve been raped” or “I want to buy some rubbers.” Give it time, and it will. But just because Apple hasn’t yet anticipated every question users can ask Siri doesn’t mean it has an axe to grind against various philosophies, creeds, races and religions.