At the Platforms State of the Union, Apple detailed how the new Liquid Glass design works, how Apple Intelligence can be added to third-party apps, how Swift Assist in Xcode will work with third-party AI models and more.

The event digs into the technical details behind the morning’s keynote announcements. Apple showed how developers can update their apps with the new design and take advantage of the latest developer tools.

You can watch the Platforms State of the Union on YouTube, in the Apple Developer app or on the web. Read on below for our coverage of the event.

Platforms State of the Union at WWDC25

The biggest event at WWDC, Apple’s annual worldwide developer conference, is the Monday morning keynote, where new OS updates are announced. But WWDC is actually a week-long conference, during which Apple explains in detail how developers can adopt new features in their apps before the updates are released in September.

In recent years, the Platforms State of the Union has become a second keynote for developers. Apple covers new developer tools in more depth, explains changes coming to the Swift programming language and digs into the technical side of the new features.

Liquid Glass, a new unified design language

Photo: Apple

Billy Sorrentino, Apple’s Senior Director of Human Interface, went into depth about the Liquid Glass design and its APIs. “Software is the heart and soul of our products,” said Sorrentino. “The new design beautifully scales across Apple’s apps and platforms, all while maintaining the iconic experience users rely on every day.”

The goal of Liquid Glass is to create a universal design language, one that is consistent across all platforms. The glass material reflects the content around it, lets the content beneath it shine through and can “visually lift controls” to make them more prominent.

New interface elements

Photo: Apple

Controls can float at the bottom of the screen, somewhat like ornaments on visionOS. Toolbar buttons on iOS can be grouped together, as on macOS, to indicate related functionality. Corner radii follow the corners of the physical devices, and the shape of your finger. Nearly every control has been redesigned; for example, text boxes are now left-aligned and have larger buttons.

There’s more consistency across platforms. iPad app layouts now borrow more from macOS, and the Mac gains new layout styles that are closer to iOS.

Tab-based apps on iOS automatically adopt the new Liquid Glass floating tab bar style, as do Mac and iPad apps using a navigation sidebar. Toolbar buttons automatically morph into dropdown menus when tapped, with the glass material and animations all provided by the system.

Many UI elements update automatically, with no code changes, simply by recompiling apps against the iOS 26, iPadOS 26, macOS 26 and other new SDKs.

Apps can also add accessories to the bottom tab bar, such as an audio player in apps like Music or Podcasts, or a search field that is easy to reach with one hand.
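As a rough sketch of a tab bar accessory, assuming the iOS 26 SDK's `tabViewBottomAccessory` modifier, a music-style app might attach a mini player like this. The view names (`PlayerTabs`, `MiniPlayer`) and their contents are hypothetical placeholders, not code Apple showed.

```swift
import SwiftUI

// Hypothetical mini player shown in the accessory slot above the tab bar.
struct MiniPlayer: View {
    var body: some View {
        HStack {
            Image(systemName: "music.note")
            Text("Now Playing")
            Spacer()
            Image(systemName: "play.fill")
        }
        .padding(.horizontal)
    }
}

// Hypothetical root view; assumes an iOS 26 deployment target.
struct PlayerTabs: View {
    var body: some View {
        TabView {
            Tab("Library", systemImage: "music.note.list") {
                Text("Library")
            }
            Tab("Browse", systemImage: "square.grid.2x2") {
                Text("Browse")
            }
        }
        // Attaches the mini player as an accessory to the floating tab bar.
        .tabViewBottomAccessory {
            MiniPlayer()
        }
    }
}
```

The tab bar itself needs no extra code to pick up the Liquid Glass style; only the accessory is added explicitly.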

In SwiftUI, a single modifier can turn buttons and other UI elements into the system glass material.
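A minimal sketch of what that looks like, assuming the iOS 26 or macOS 26 SDK: `.glassEffect()` applies the glass material to an arbitrary view, and `.buttonStyle(.glass)` / `.buttonStyle(.glassProminent)` are the glass button styles. The `PlaybackControls` view and its contents are hypothetical.

```swift
import SwiftUI

// Hypothetical playback bar; requires the iOS 26 or macOS 26 SDK.
struct PlaybackControls: View {
    var body: some View {
        VStack(spacing: 16) {
            // Any view can adopt the glass material with glassEffect().
            Text("Now Playing")
                .padding()
                .glassEffect()

            HStack(spacing: 12) {
                Button("Previous", systemImage: "backward.fill") {}
                // The innermost style wins, so Play gets the more prominent glass style.
                Button("Play", systemImage: "play.fill") {}
                    .buttonStyle(.glassProminent)
                Button("Next", systemImage: "forward.fill") {}
            }
            // Gives the remaining buttons the standard glass button style.
            .buttonStyle(.glass)
        }
    }
}
```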

Create Liquid Glass icons

Photo: Apple

A new Home Screen style uses clear icons. These are made out of two to four different layers. A new app, Icon Composer, lets you create these custom icons on the Mac. Import vector shapes to build your icon. You can preview how the specular highlights, shadows and various visual styles will look.

New Foundation Models framework

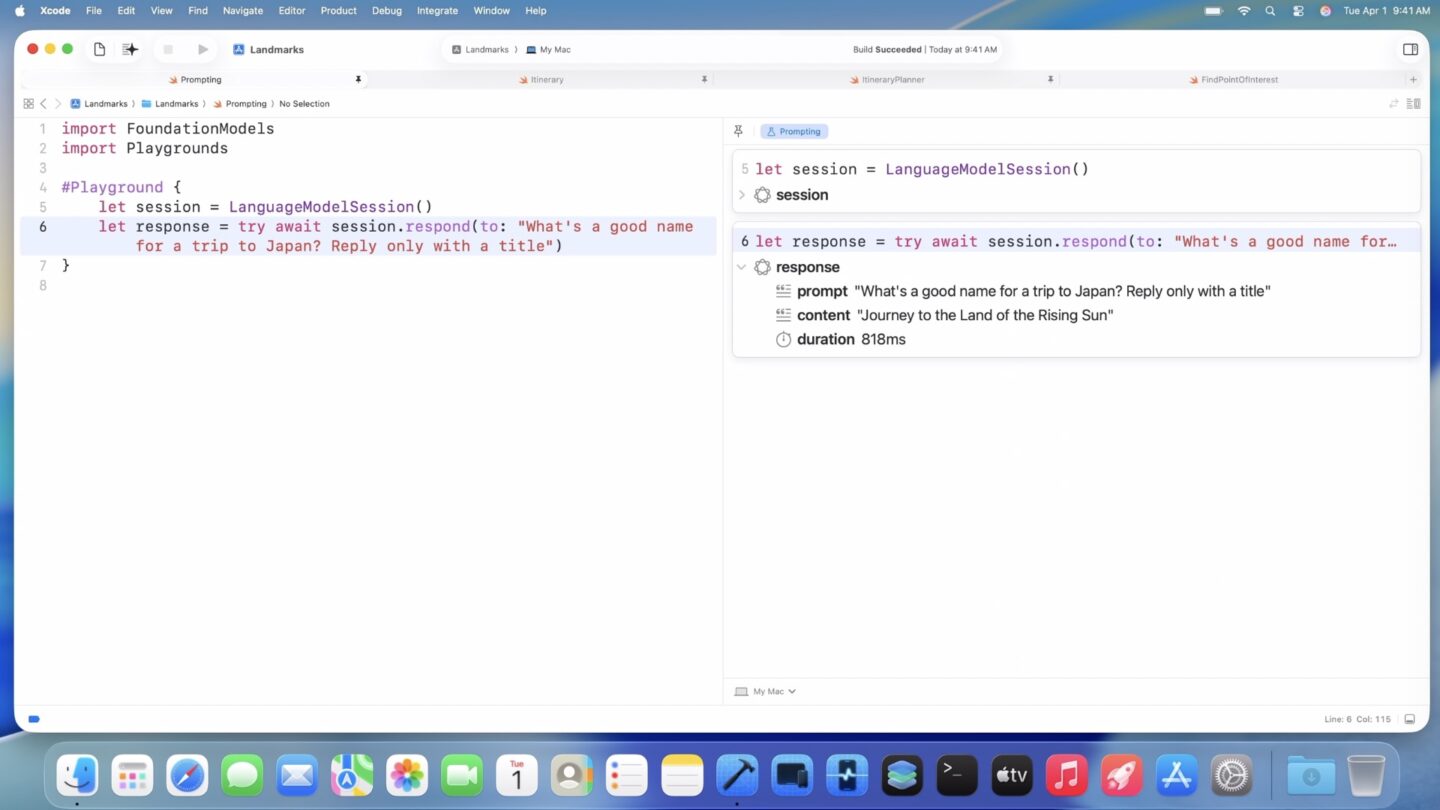

Screenshot: Apple

Third-party apps can now build their own AI features on top of Apple’s on-device foundation model, the same model at the core of Apple Intelligence. This lets developers use powerful on-device capabilities for free, rather than paying for an expensive third-party service. It also works entirely offline.

Developers can prompt the model with just three lines of code. The model has been fine-tuned for many additional use cases. It also supports structured data output, so you can be sure the results come back in a form your app can use. Developers can build multiple AI tools into their app, including tools that fetch online data from services like Wikipedia.
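The sketch below shows both styles of request, assuming the `FoundationModels` framework in the iOS 26 / macOS 26 SDKs: a plain-text prompt through `LanguageModelSession.respond(to:)`, and structured output through a `@Generable` type passed to `respond(to:generating:)`. The `RecipeIdea` type, its fields and the prompts are hypothetical, and a real app should first check that the on-device model is available on the user's device.

```swift
import FoundationModels

// Hypothetical output type. @Generable lets the model fill in this struct
// directly instead of returning free-form text.
@Generable
struct RecipeIdea {
    @Guide(description: "A short name for the dish")
    var name: String

    @Guide(description: "The main ingredients")
    var ingredients: [String]
}

// Plain-text prompt: the "three lines of code" case.
func tagline(for appName: String) async throws -> String {
    let session = LanguageModelSession()
    let response = try await session.respond(to: "Write a one-line tagline for an app called \(appName).")
    return response.content
}

// Structured output: ask the model for a RecipeIdea rather than a String.
func recipeIdea(using ingredient: String) async throws -> RecipeIdea {
    let session = LanguageModelSession()
    let response = try await session.respond(
        to: "Suggest a simple dinner that uses \(ingredient).",
        generating: RecipeIdea.self
    )
    return response.content
}
```

Because the result is a typed Swift value, the app can read `idea.name` and `idea.ingredients` directly instead of parsing model text.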

Of course, you can also use a custom model with Apple’s existing Core ML framework.

App Intents now power more parts of the system, from Spotlight on the Mac to custom search tools in Visual Intelligence on iPhone.

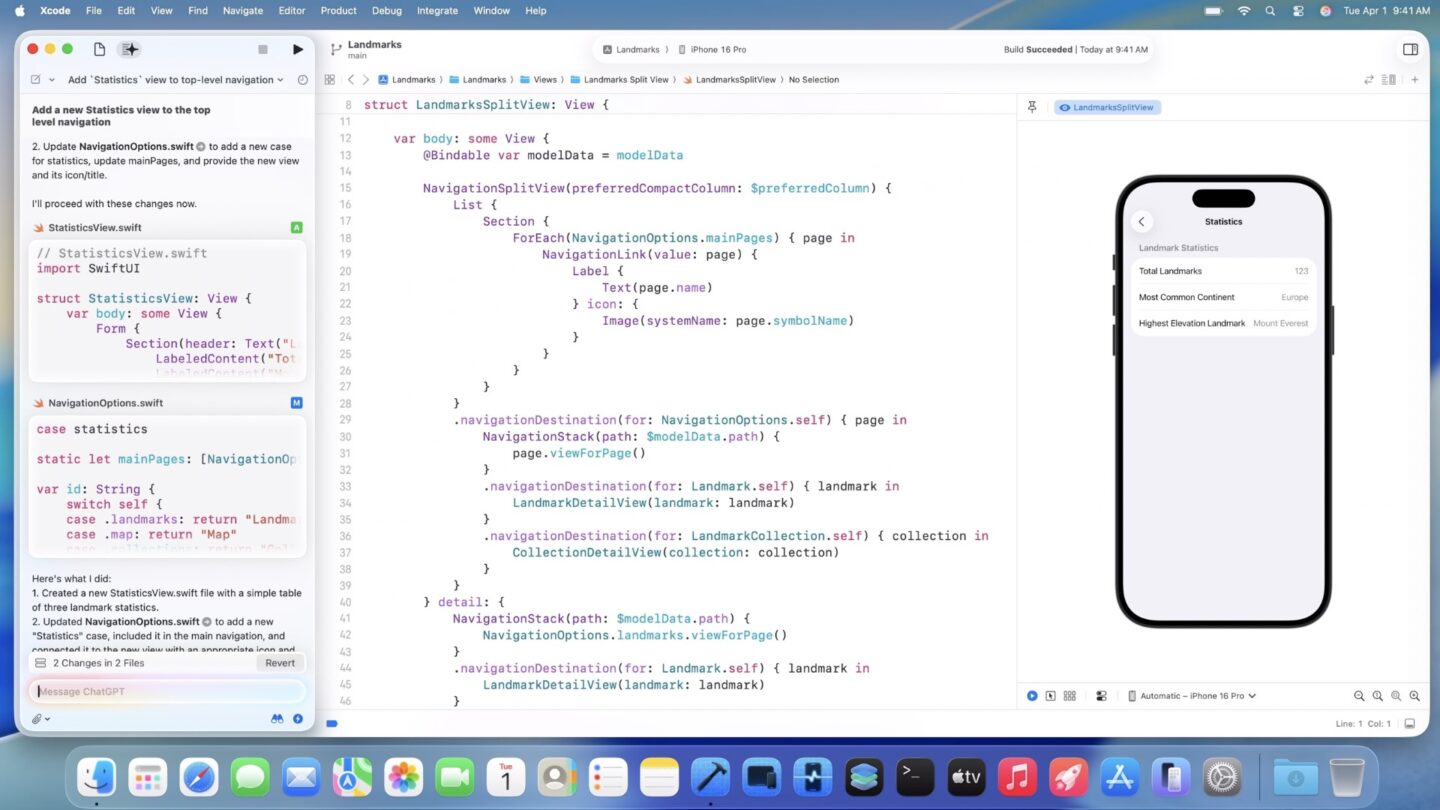

AI features in Xcode

Screenshot: Apple

Predictive code completion, introduced last year in Xcode, is even more powerful in Xcode 26.

Swift Assist, announced last year but only available now, has expanded in scope and now integrates third-party services. Apple demoed integration with ChatGPT, which provides coding assistance from a plain-language prompt.

With ChatGPT, you get a limited number of prompts per day, or you can sign in to a paid account for more access. You can also use Claude or other models by providing an API key.

Coding Tools is a new Xcode equivalent of Apple’s system-wide Writing Tools. It can create comments, SwiftUI previews and more. When your code has a syntax or compiler error, you can even have it generate a fix for you.

If you’re scared of AI ruining your codebase, a new timeline feature lets you roll back its changes quickly.

All of these features are available today in the first Xcode 26 beta.

New Swift 6.2 features

Swift 6.2, the latest version of the open-source programming language used to build software for Apple platforms, adds the following new features:

- Arrays can be declared with a fixed size using the new `InlineArray` type, which stores its elements inline for faster, more predictable memory use.

- A new `Span` type allows fast, direct access to contiguous memory without the dangers of using pointers. It can also be used to integrate C and C++ code in a more memory-safe way. Apple is already using these features to securely introduce some Swift code into WebKit.

- New packages are available that integrate Swift with Java and JavaScript.

- A new toolchain lets you compile Swift 6.2 code to WebAssembly.

- Swift 6.2 can now explicitly mark code as single-threaded, for simple tasks that shouldn’t be parallelized.

- Containerization is a new open-source Swift tool that lets you build, download and run Linux container images right on the Mac. This can help developers move their cloud server code to Swift.
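To make the fixed-size array and `Span` items concrete, here is a small sketch, assuming a Swift 6.2 toolchain (on Apple platforms these types may also need an OS 26 deployment target). `InlineArray<4, Int>` fixes the element count in the type and stores the elements inline, while `Span` gives bounds-checked read access to contiguous memory without raw pointers. The `sum(_:)` and `demo()` helpers and the sample values are hypothetical.

```swift
// Span is a non-owning, non-escaping view of contiguous memory (Swift 6.2).
func sum(_ values: Span<Int>) -> Int {
    var total = 0
    // Span is not a Sequence, so iterate over its indices; each access is bounds-checked.
    for i in values.indices {
        total += values[i]
    }
    return total
}

func demo() -> (inline: Int, span: Int) {
    // A fixed-size array: the count (4) is part of the type and storage is inline.
    var readings: InlineArray<4, Int> = [3, 1, 4, 1]
    readings[3] = 5  // Elements are mutable, but the count can never change.

    var inlineTotal = 0
    for i in readings.indices {
        inlineTotal += readings[i]
    }

    // Array exposes its contiguous storage as a Span via the `span` property.
    let samples = [2, 7, 1, 8]
    let spanTotal = sum(samples.span)

    return (inlineTotal, spanTotal)
}

let totals = demo()
print(totals.inline, totals.span)  // prints "13 18"
```

Because a `Span` cannot escape the scope that borrowed it, `sum(_:)` can read the memory safely but cannot store a reference to it for later.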

New SwiftUI frameworks and tools

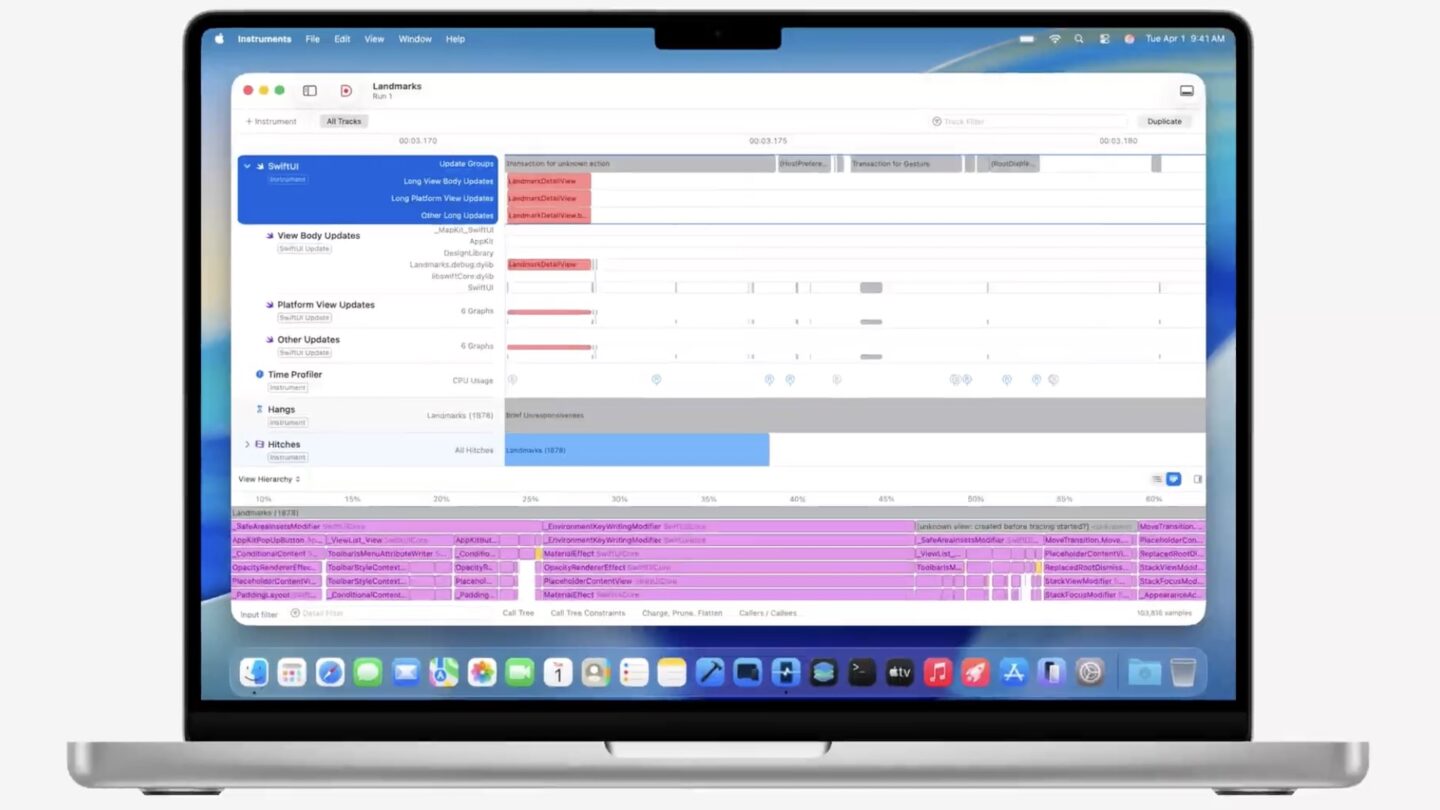

Screenshot: Apple

- SwiftUI has a new framework that makes embedding web views inside an app far easier.

- A new rich text editor lets developers create custom formatting features.

- Swift Charts can now create advanced 3D charts.

- Idle Prefetch, a feature that can improve scrolling through lists and tables, comes to the Mac with up to a 6× performance improvement.

- A new SwiftUI Performance instrument helps developers debug their complex views and figure out what’s slowing them down.
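As a rough illustration of the web view and rich text items, assuming the iOS 26 or macOS 26 SDK: WebKit's new SwiftUI `WebView` can be created from a URL, and `TextEditor` can bind to an `AttributedString` instead of a plain `String` to edit rich text. The `NotesWithPreview` view, its initial text and the URL are hypothetical.

```swift
import SwiftUI
import WebKit

// Hypothetical page to display alongside the editor.
private let docsURL = URL(string: "https://developer.apple.com")!

// Hypothetical view; requires the iOS 26 or macOS 26 SDK.
struct NotesWithPreview: View {
    // Binding TextEditor to an AttributedString (rather than a String) enables rich text.
    @State private var notes = AttributedString("Type your notes here")

    var body: some View {
        VStack {
            TextEditor(text: $notes)
                .frame(minHeight: 120)
            // WebKit's SwiftUI WebView loads and displays the page at the given URL.
            WebView(url: docsURL)
        }
    }
}
```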

AR features on Vision Pro

Image: Apple

- SwiftUI has new features for building 3D layouts, like rotation, view alignment inside a volume and more.

- Apps can be occluded, or partially hidden, by things in your environment such as walls, corners or furniture.

- Apps and widgets can be pinned to physical spaces, and they remember their position in the environment each time you put on Vision Pro. These widgets are built with the very same APIs as they are on iOS and watchOS.

- New SharePlay APIs let you make apps and games that are shared between multiple people wearing Vision Pro headsets in the same room.

- 2D images inside your app can be converted into Spatial Scenes using on-device machine learning. visionOS also supports playback of 180° and spherical video.

Metal 4 and other Mac gaming features

Photo: Apple

- Neural rendering in Metal 4 can add inference networks on top of your graphics shaders to improve lighting and geometry.

- MetalFX upscaling, frame interpolation and denoising all leverage the Neural Engine to provide smoother, higher-quality gameplay.

- The Game Porting Toolkit, a software package that helps convert Windows games to the Mac, now supports Windows upscaling features. It also lets developers customize the Metal performance HUD with the information they need to debug their games.

- You can remotely run and debug games on a Mac from Windows, for easier cross-platform development.

- Pairing game controllers and syncing them across devices is faster and easier in all the latest updates.

- Gaming on Vision Pro is now easier, with support for Sony’s PlayStation VR2 Sense controller and hand tracking that is up to 3× faster.

- Low-power mode can prolong user battery life while gaming by automatically reducing graphics settings.

- The new Games app on the Mac, and Games overlay in Control Center, can make competing remotely with friends easier.

Developers can dive into even more detail on exactly how to adopt the new platform features in the WWDC25 session videos.