iCloud is a cloud storage and cloud computing service developed by Apple Inc. Launched in 2011, it is designed to securely store and sync users’ data across their Apple devices, including iPhone, iPad and Mac.

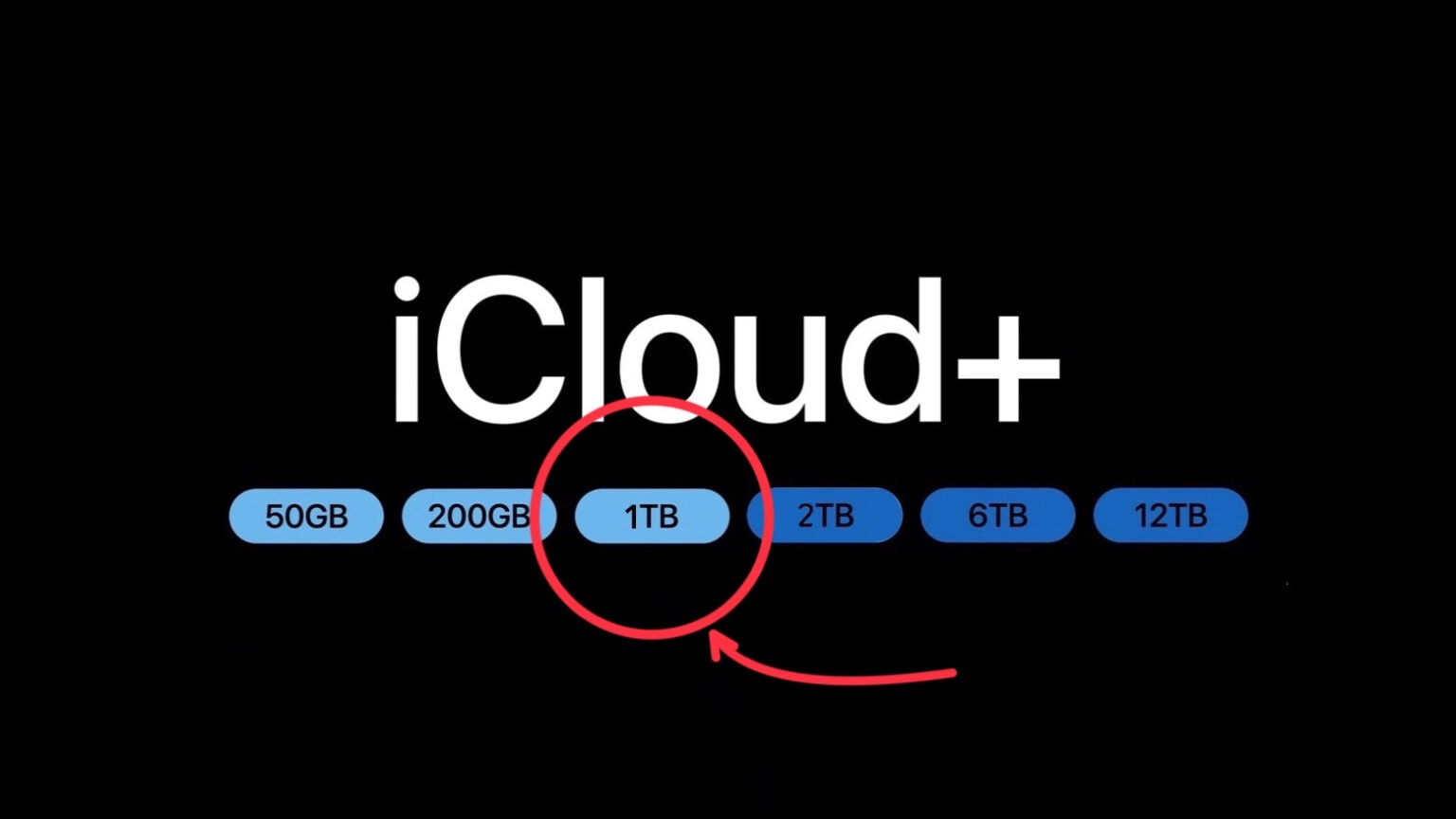

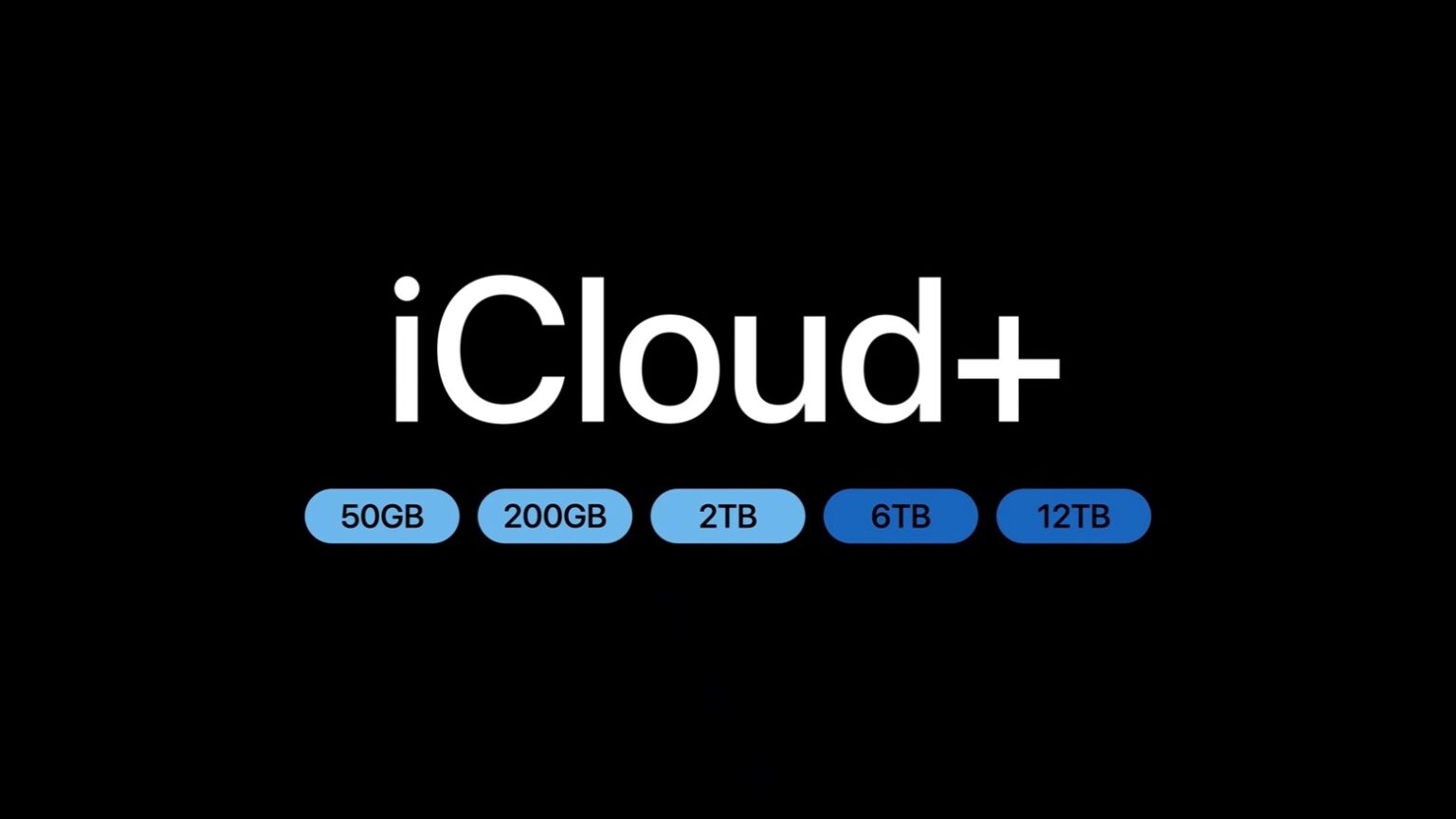

iCloud’s signature feature is storage. Users can place various types of files online, including photos, videos, documents, music and app data, and access them from all their devices. Each user gets 5GB of free storage by default, with options to upgrade to larger storage plans for a monthly fee.

iCloud automatically syncs data across all of a user’s Apple devices, ensuring that changes made on one device are reflected on all the others. This includes contacts, calendars, reminders, notes, Safari bookmarks, and more.

In addition, the service provides automatic backup functionality for iOS and iPadOS devices. When enabled, iCloud Backup backs up users’ device settings, app data, messages, photos, and more to the cloud, allowing for easy restoration in case of device loss, damage or upgrade.

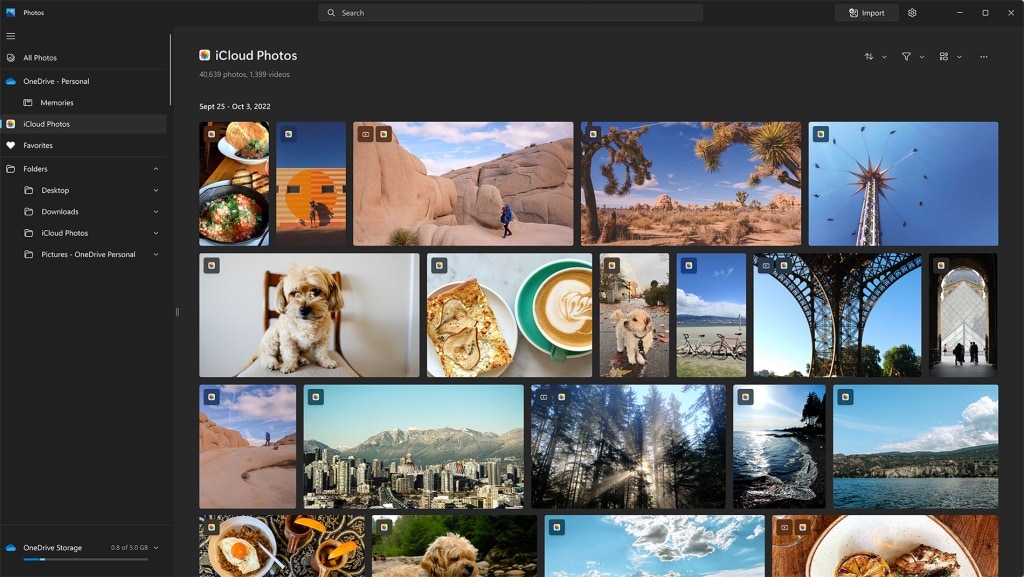

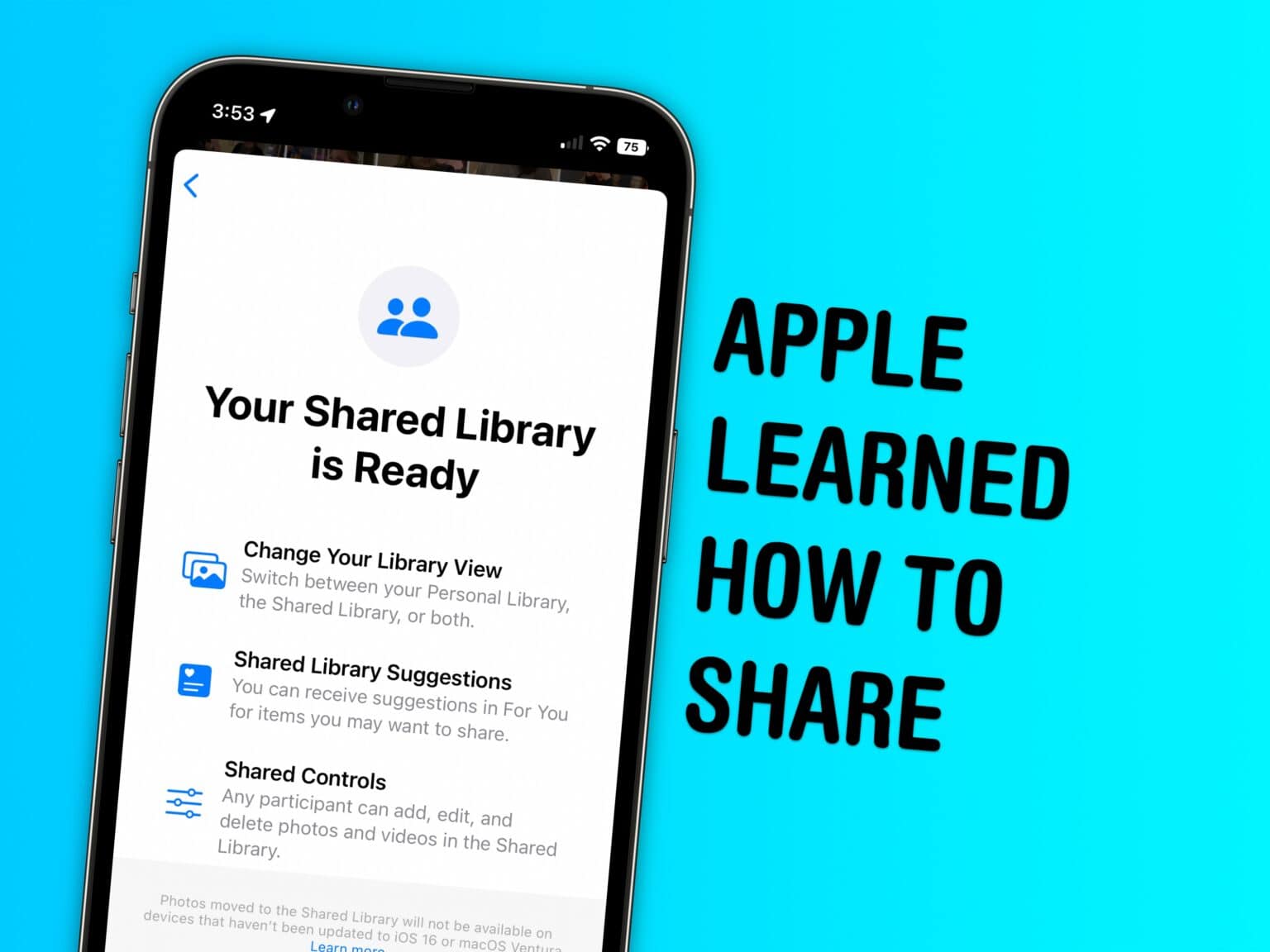

iCloud Photos automatically uploads and stores users’ pictures and videos, making them accessible across all devices. It also includes features like Shared Albums for sharing photos and videos with friends and family.

iCloud is an important part of the Find My service, which helps users locate lost or stolen devices, such as iPhone, iPad, Mac, Apple Watch, or AirPods. It also allows users to remotely lock, erase, or play a sound on their device to help locate it.

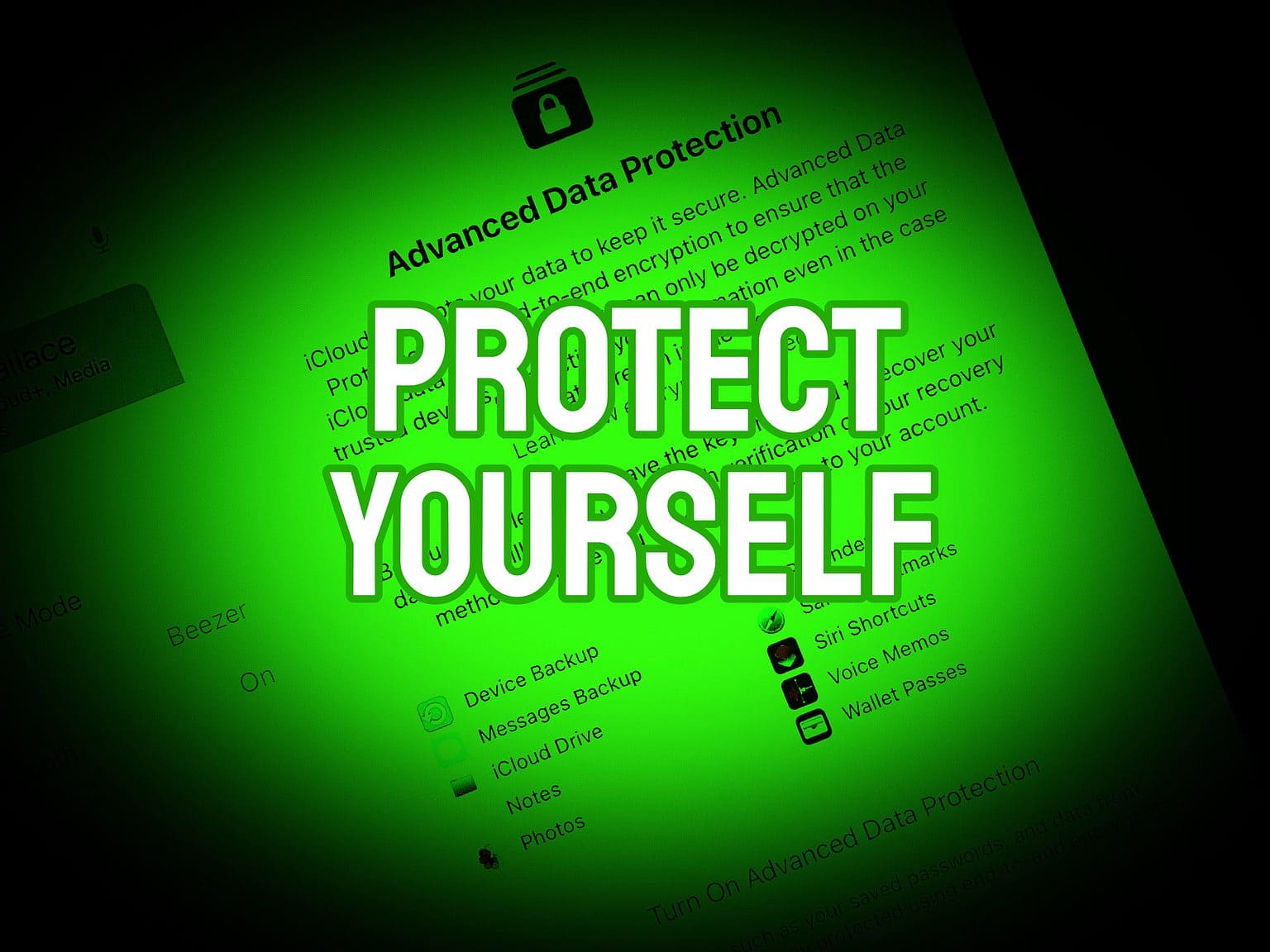

Most iCloud data is encrypted both in transit and at rest, ensuring that users’ data remains secure and private. Apple has implemented various security measures, including two-factor authentication, to protect users’ accounts and data.

Read Cult of Mac’s latest posts on iCloud:

October 12, 2011: Apple launches iCloud, a service that lets users automatically and wirelessly store content and push it to their various devices.

August 4, 2008: Steve Jobs owns up to mistakes in launching MobileMe, spinning Apple’s bungled cloud service rollout as a learning opportunity.

July 1, 2012: Apple shuts down its MobileMe web service, pushing users to switch to iCloud.
