Appropriately enough, at a time when we’re wary of touching any surface without immediately washing our hands, the U.S. Patent & Trademark Office has published an eye-tracking patent application from Apple. It describes a method of letting users control an interface with nothing more than a glance.

The application may shed light on one of the features of Apple’s rumored head-mounted display for augmented and virtual reality.

Apple’s application, published Thursday and shared by Patently Apple Monday, notes that “Existing computing systems, sensors and applications do not adequately provide remote eye tracking for electronic devices that move relative to the user.”

It describes one application of eye-tracking as being for “a head mounted display (HMD) [that] moves with [the] user and can provide eye tracking.”

Eye tracking in a headset is something that last year’s HTC Vive Pro Eye introduced. The feature makes it possible to track and analyze eye movement, attention and focus, which can be used to create more responsive and immersive virtual simulations. It can also yield insights about user performance and interaction, which helps with things like improving training scenarios that take place in VR.

Photo: Apple

Eye-tracking patent

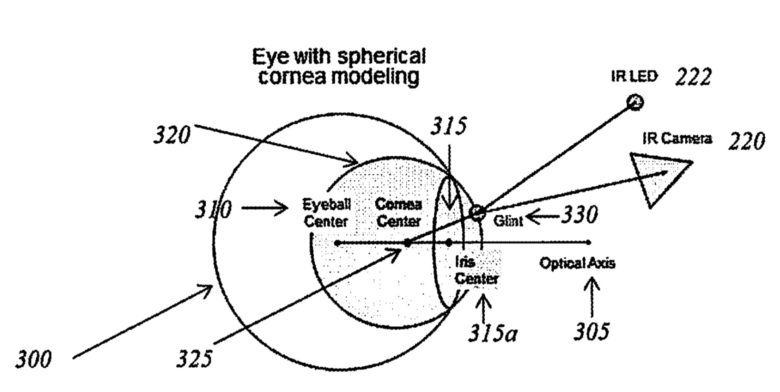

Apple’s application describes a sensor-fusion approach in which gaze direction is identified by tracking two locations in a 3D coordinate system. It does so using a single active illumination source and depth-sensing data. As the application puts it in patent-ese:

“Some implementations of the disclosure involve, at a device having one or more processors, one or more image sensors, and an illumination source, detecting a first attribute of an eye based on pixel differences associated with different wavelengths of light in a first image of the eye. These implementations next determine a first location associated with the first attribute in a three dimensional (3D) coordinate system based on depth information from a depth sensor.

Various implementations detect a second attribute of the eye based on a glint resulting from light of the illumination source reflecting off a cornea of the eye. These implementations next determine a second location associated with the second attribute in the 3D coordinate system based on the depth information from the depth sensor, and determine a gaze direction in the 3D coordinate system based on the first location and the second location.”

As the patent application explains, the system could take multiple pictures of an eye and convert the features it detects into 3D locations. Tracking the two locations together would let it accurately estimate gaze direction.
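To make the geometry concrete, here is a minimal Python sketch of the idea, not Apple’s actual method. It assumes a simple pinhole camera model for turning a pixel plus a depth reading into a 3D point, and it assumes the gaze direction can be approximated as the unit vector running from the second location through the first. The function names, the camera intrinsics (`fx`, `fy`, `cx`, `cy`) and the sample pixel values are all hypothetical.

```python
import math


def backproject(u, v, depth, fx, fy, cx, cy):
    """Convert a pixel (u, v) and its depth into a 3D camera-space point.

    Assumes a pinhole camera; fx, fy, cx, cy are hypothetical intrinsics.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def gaze_direction(first_location, second_location):
    """Return the unit vector pointing from second_location to first_location.

    Treating this vector as the gaze direction is an assumption made for
    illustration; the application only says the direction is based on both.
    """
    delta = tuple(a - b for a, b in zip(first_location, second_location))
    norm = math.sqrt(sum(c * c for c in delta))
    if norm == 0.0:
        raise ValueError("locations coincide; gaze direction is undefined")
    return tuple(c / norm for c in delta)


# Hypothetical readings: one eye feature and one glint-derived feature,
# each given as a pixel position plus a depth value in metres.
intrinsics = dict(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
first = backproject(320, 240, 0.300, **intrinsics)
second = backproject(326, 240, 0.305, **intrinsics)
direction = gaze_direction(first, second)
```

In this sketch the depth sensor supplies the third coordinate for each feature, which is why two image detections are enough to define a direction in 3D.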

What does this refer to?

The fact that Apple references a head-mounted display in its patent application is no guarantee that the technology is destined for a headset. Illustrations in the application also show an iPhone and an iMac. Apple might therefore be interested in eye tracking as a future interface feature across platforms.

Apple has previously explored eye-tracking in patents — including two-person eye-tracking.