Apple defends its plan to scan user photos for child sexual abuse imagery in a newly published FAQ that aims to quell growing concerns from privacy advocates.

The document provides “more clarity and transparency,” Apple said, after noting that “many stakeholders including privacy organizations and child safety organizations have expressed their support” for the move.

The FAQ explains the differences between child sexual abuse imagery scanning in iCloud and the new child-protection features coming to Apple’s Messages app. It also reassures users that Apple will not entertain government requests to expand the features.

The new FAQ comes after Apple last week confirmed it will roll out new child-safety features that include scanning for known child sexual abuse material, aka CSAM, in iCloud Photos libraries, and detecting explicit photos in Messages.

Since the announcement, a number of privacy advocates — including whistleblower Edward Snowden and the Electronic Frontier Foundation — have spoken out against the plan, which rolls out later this year.

“Even a well-intentioned effort to build such a system will break key promises of the messenger’s encryption itself and open the door to broader abuses,” the EFF warned. Apple hopes its new FAQ will ease those concerns.

Apple publishes FAQ on iCloud Photos scanning

“We want to protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material (CSAM),” reads the six-page document published over the weekend.

“Since we announced these features, many stakeholders including privacy organizations and child safety organizations have expressed their support of this new solution, and some have reached out with questions. This document serves to address these questions and provide more clarity and transparency in the process.”

The first concern the FAQ addresses is the difference between CSAM detection in iCloud Photos and the new communication safety tools in Messages. “The two features are not the same,” Apple states.

Clearing up confusion

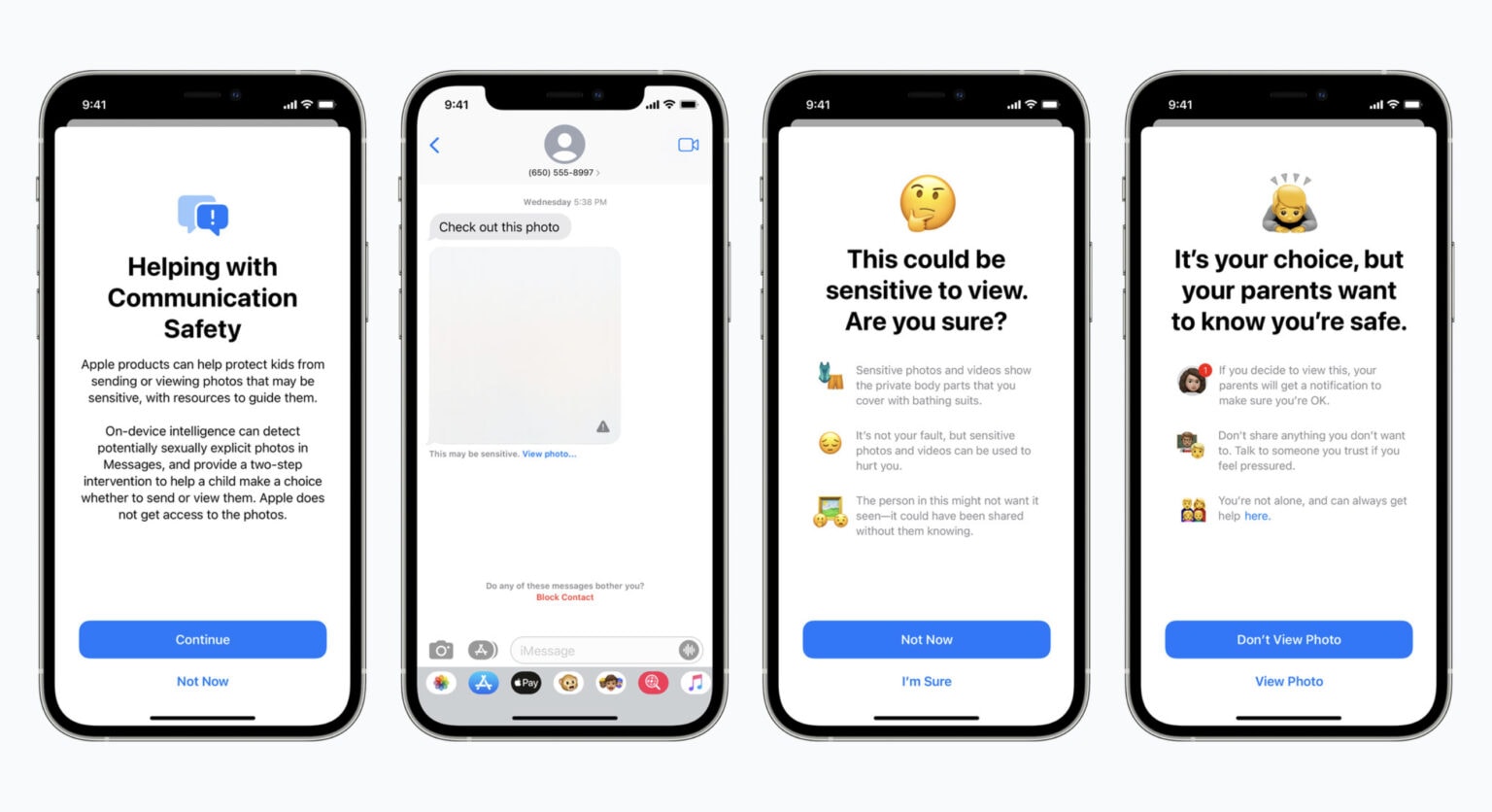

Communication safety in Messages “works only on images sent or received in the Messages app for child accounts set up in Family Sharing,” the FAQ explains. “It analyzes the images on-device, and so does not change the privacy assurances of Messages.”

When a sexually explicit image is sent or received by a child account, the image is blurred and the child is warned about its content. They will also be provided with “helpful resources,” Apple says, and “reassured that it is okay if they do not want to view or send the photo.”

Children will also be told that, to ensure they are safe, their parents will be notified if they do choose to view or send a sexually explicit image.

CSAM detection in iCloud Photos is very different. It’s designed to “keep CSAM off iCloud Photos without providing information to Apple about any photos other than those that match known CSAM images.”

“This feature only impacts users who have chosen to use iCloud Photos,” Apple adds. “There is no impact to any other on-device data.” The feature also does not apply to Messages, which are not scanned on an adult’s device.

Communication safety in Messages

The FAQ goes on to address various concerns about both features. On communication safety in Messages, it explains that parents or guardians must opt in to use the feature for child accounts, and that parental notifications are available only for children aged 12 or younger.

Apple never finds out when sexually explicit images are discovered in the Messages app, and no information is shared with or reported to law enforcement agencies, it says. Apple also confirms that communication safety does not break end-to-end encryption in Messages.

The FAQ also confirms that parents will not be warned about sexually explicit content in Messages unless a child chooses to view or share it. If the child is warned but chooses not to view or share the content, no notification is sent. For children aged 13 to 17, a warning still appears, but parents are not notified.

CSAM detection in iCloud Photos

On CSAM detection, Apple confirms that the feature does not scan all images stored on a user’s iPhone — only those uploaded to iCloud Photos. “And even then, Apple only learns about accounts that are storing collections of known CSAM images, and only the images that match to known CSAM.”

If you have iCloud Photos disabled, the feature does not work. And actual CSAM images are not used for comparison. “Instead of actual images, Apple uses unreadable hashes that are stored on device. These hashes are strings of numbers that represent known CSAM images, but it isn’t possible to read or convert those hashes into the CSAM images they are based on.”

“One of the significant challenges in this space is protecting children while also preserving the privacy of users,” Apple explains. “With this new technology, Apple will learn about known CSAM photos being stored in iCloud Photos where the account is storing a collection of known CSAM. Apple will not learn anything about other data stored solely on device.”

On privacy and security

The final section of the FAQ addresses the privacy and security concerns raised since Apple’s initial announcement. It confirms that CSAM detection works only on CSAM and cannot look for anything else, and that although Apple will report CSAM to law enforcement, the process is not automated.

“Apple conducts human review before making a report to NCMEC [the National Center for Missing & Exploited Children],” the FAQ reads. “As a result, the system is only designed to report photos that are known CSAM in iCloud Photos. In most countries, including the United States, simply possessing these images is a crime.”

Apple also confirms it will “refuse any such demands” from governments that attempt to force Apple to detect anything other than CSAM. “We have faced demands to build and deploy government-mandated changes that degrade the privacy of users before, and have steadfastly refused those demands.”

“Let us be clear,” Apple adds, “this technology is limited to detecting CSAM stored in iCloud and we will not accede to any government’s request to expand it.”

You can read the full FAQ on Apple’s website now.