Sitting on a cash pile of billions, Apple’s not a company that’s used to being left behind. But when it comes to artificial intelligence, that’s exactly what has happened in recent years. While companies like Google and Facebook led the way with cutting-edge AI, Apple lagged. It was embarrassing for a company in Apple’s position to miss out on the biggest tech revolution of the moment.

But during Monday’s WWDC 2017 keynote, Apple went a long way toward making amends.

Why Apple fell behind in AI

Few things summed up the different mindsets of Apple and Google, the two biggest tech companies in the world, better than their respective biggest purchases of 2014. Google invested in the future with U.K. deep learning startup DeepMind, which has since produced some of the biggest breakthroughs in AI in recent years. Most notably, it developed AlphaGo, the AI that beat the world’s No. 1-ranked Go player in a three-game series.

Apple bought Beats.

There’s no doubt that Beats is a cool brand, popular with the all-important youth market, and that it helped form the basis for Apple Music. But it’s hard to argue that it was anywhere near as significant an acquisition as DeepMind. And that’s before you take into account that Google paid “just” $400 million for DeepMind, compared with Apple’s $3 billion outlay for Beats.

To many tech watchers, it looked like Apple was missing out on a tech revolution happening right under its nose.

Since then, Google has continued both to carry out cutting-edge AI research and to build machine learning tools into its products. Apple may have popularized the AI virtual assistant with Siri, but Google Now quickly overtook it.

Apple’s extreme secrecy, including its refusal to publish any of its AI research for competitive reasons, only made the problem worse. AI researchers were suddenly the hottest commodity in tech, and for the first time since the “bad old days” of the 1990s, Apple wasn’t where the best people in the field wanted to work.

Somewhere along the way, Apple changed its stance. In 2014, Apple began using deep learning technology to improve Siri, something Google had been busy doing with its own software for a couple of years.

Last year, Apple finally agreed to let its researchers start publishing their work in academic journals. OK, so it’s only published one so far — a paper on training image recognition algorithms, titled “Learning From Simulated and Unsupervised Images Through Adversarial Training” — but it’s a start for a company that often goes to extreme lengths to keep its research secret.

Photo: Apple

AI makes Apple devices smarter

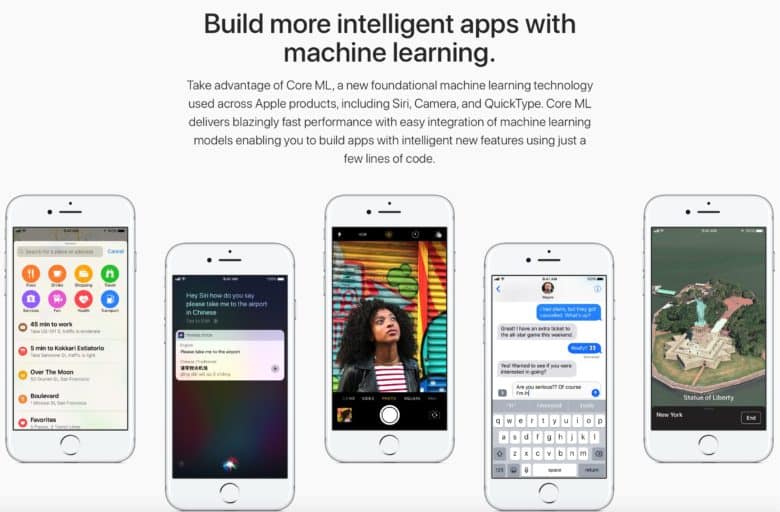

There were several big announcements at WWDC about how Apple is using machine learning in virtually all its new products. For example, watchOS 4 brings a new watch face powered by Siri. The Siri watch face customizes content in real time — including everything from traffic information and news to smart home controls and anything else the virtual assistant thinks might be relevant.

iOS 11, meanwhile, brings an improved Memories feature in Photos, which does a better job of recognizing things like events and people in users’ images, along with a more natural-sounding voice for Siri.

Maybe the most important announcement, however, barely got any attention onstage during an event that seemed crammed to the bursting point: the debut of Core ML, a new machine learning framework for iOS 11. Core ML, together with new higher-level APIs built on top of it, will let developers integrate features like image recognition and natural language processing into regular iOS apps. The new Vision framework, for example, offers face tracking, face detection, facial landmark detection, text detection, barcode detection, object tracking and more.

To maintain Apple’s reputation for user privacy, these tools run locally on the device rather than in the cloud. To make that practical, Core ML is optimized to minimize memory usage and power consumption.

(Apple is also rumored to be developing a chip designed specifically for handling machine learning tasks, which would make iPhones and iPads even more adept at carrying out these types of functions locally.)

On top of this, Apple’s new tools can import models built with Caffe, an open source deep learning framework for building and training neural nets, as well as models from several other popular machine learning tools. (Google’s own AI framework, TensorFlow, is notably absent from the list.)

Apple turns the corner on AI

To be clear, Apple still has a lot of catching up to do. It may have considerably more coins in its coffers than other tech companies, but the likes of Google, Baidu and others have been investing in artificial intelligence for much longer. Cupertino’s competitors also employ many of the world’s leading AI researchers.

But Apple’s apparently turned a corner on AI — and that can only be positive.